
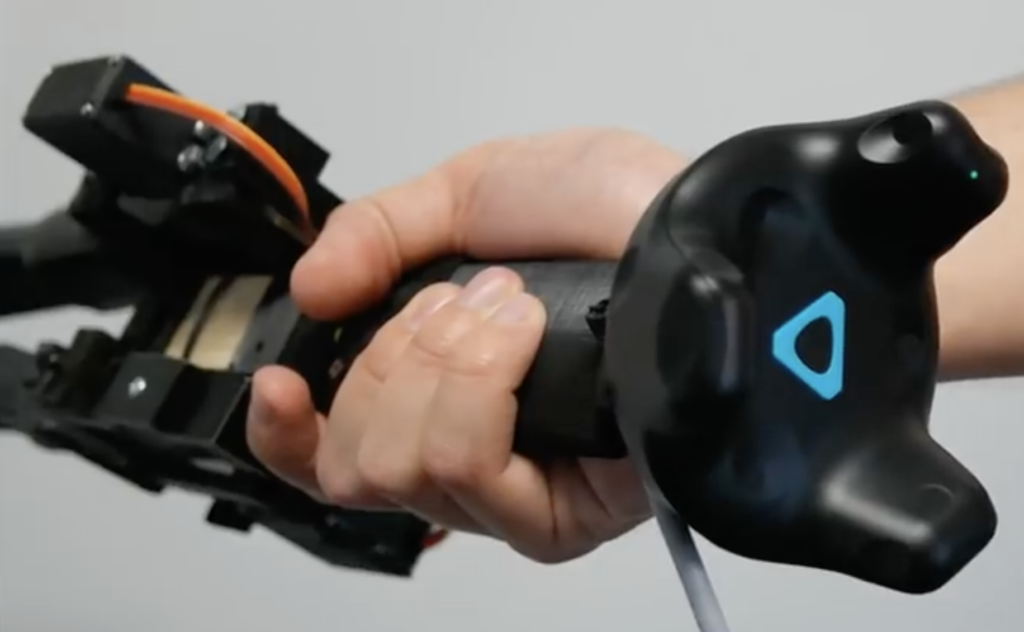

In virtual reality, anything is possible, yet accurately modeling real-world objects in a digital space remains a huge challenge because virtual objects lack the weight and feedback of physical ones. Inspired by working with digital cameras and the inherent imperfections they bring to their videos, Bas van Seeters has developed a rig that translates the feeling of a camera into VR with only a few components.

The project began with a salvaged Panasonic MS70 VHS camcorder, chosen for its spacious interior and easily adjustable wiring. An Arduino UNO Rev3 was then connected to the camera’s start/stop recording button, an indicator light, and a potentiometer for changing the in-game focus. The UNO reads the inputs and writes the data over USB serial so that a Unity plugin can apply the correct effects. Van Seeters even included a two-position switch for selecting between wide and telescopic fields of view.
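To make the serial link a little more concrete, here is a minimal sketch of how the three inputs might travel as one line of text and be decoded on the computer side. The write-up does not describe the actual message format, and the real plugin runs inside Unity, so everything here is an assumption for illustration: the comma-separated `recording,focus_raw,telephoto` layout, the names `encode_frame`, `decode_frame` and `CamcorderState`, and the use of Python. The one hardware fact relied on is that the UNO's `analogRead()` returns 10-bit values from 0 to 1023.

```python
from dataclasses import dataclass

ADC_MAX = 1023  # the UNO's analogRead() returns 10-bit values, 0..1023


@dataclass(frozen=True)
class CamcorderState:
    """Hypothetical snapshot of the rig's inputs (names are illustrative)."""
    recording: bool   # state derived from the start/stop button
    focus: float      # potentiometer position, normalized to 0.0..1.0
    telephoto: bool   # two-position switch: False = wide, True = telescopic


def encode_frame(recording: bool, focus_raw: int, telephoto: bool) -> str:
    """Build the line the microcontroller side might print (assumed format)."""
    return f"{int(recording)},{focus_raw},{int(telephoto)}\n"


def decode_frame(line: str) -> CamcorderState:
    """Parse one 'recording,focus_raw,telephoto' line into a CamcorderState."""
    fields = line.strip().split(",")
    if len(fields) != 3:
        raise ValueError(f"expected 3 fields, got {len(fields)}: {line!r}")
    recording, focus_raw, telephoto = (int(f) for f in fields)
    if not 0 <= focus_raw <= ADC_MAX:
        raise ValueError(f"focus reading out of range: {focus_raw}")
    return CamcorderState(
        recording=bool(recording),
        focus=focus_raw / ADC_MAX,  # scale the raw reading to 0.0..1.0
        telephoto=bool(telephoto),
    )
```

Calling `decode_frame(encode_frame(True, 512, False))` yields a state with recording on, the focus knob near its midpoint, and the wide field of view selected. A plain-text line per update keeps the link easy to inspect in a serial monitor, at the cost of a few extra bytes compared with a binary packet.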

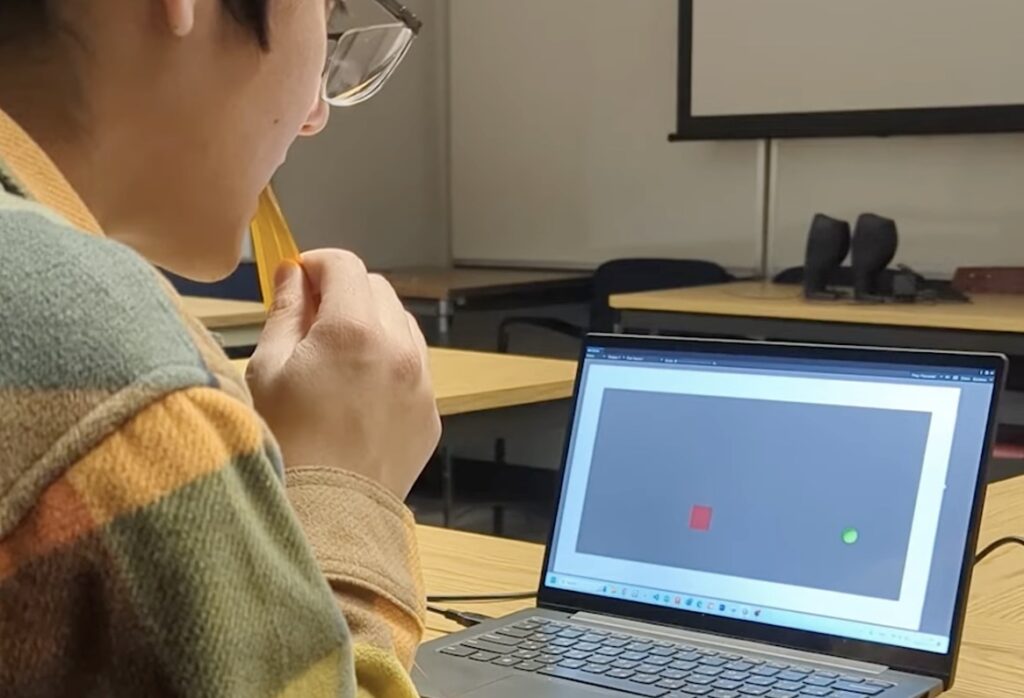

With the Arduino now sending data, the last step involved creating a virtual camcorder object in Unity and making it follow the movement of a controller in 3D space, thus allowing the player to track things in-game and capture videos. More details on the project can be found in van Seeters’ write-up and accompanying video.

The post Getting more realistic camera movements in VR with an Arduino appeared first on Arduino Blog.
