
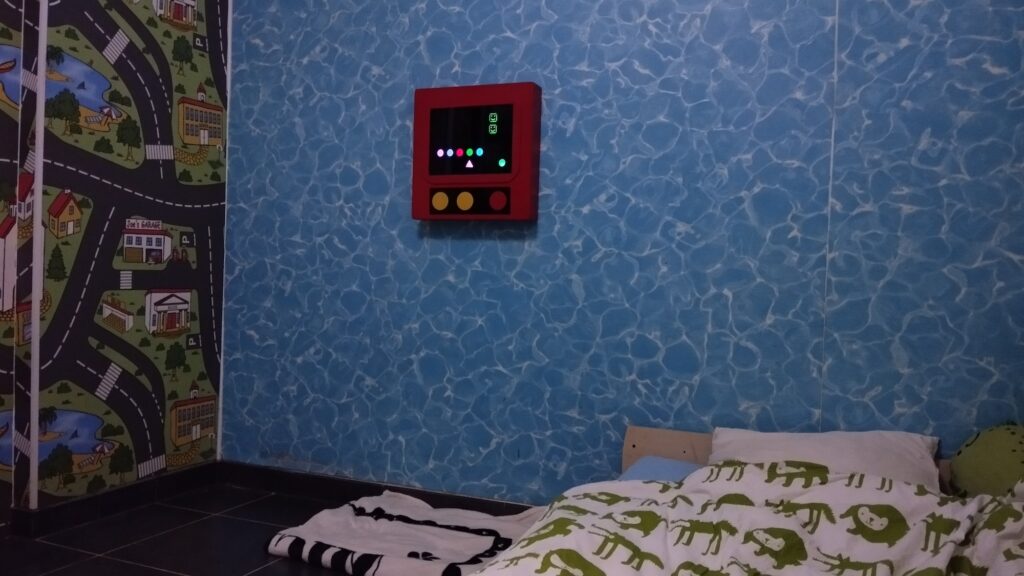

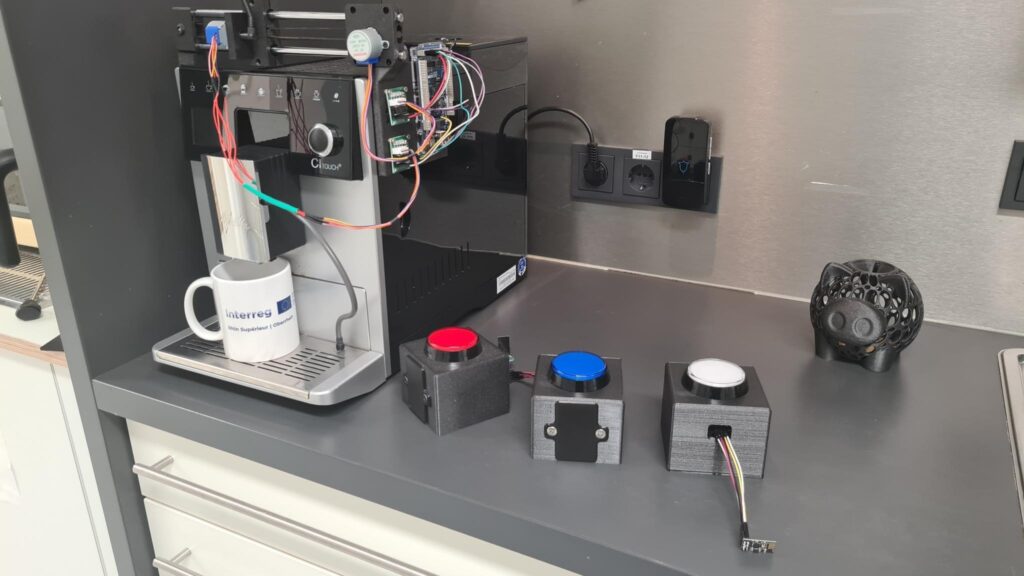

While many of the things we interact with every day have become more usable by people with disabilities, the kitchen remains one important area of our lives that still lacks many accessibility features. A commonplace example is the coffee maker, whose array of small buttons, or even a touchscreen, can be hard to see or press. Orlie on Instructables has developed a set of wireless buttons and an accompanying receiver that translate simple actions into an easy, end-to-end brewing experience.

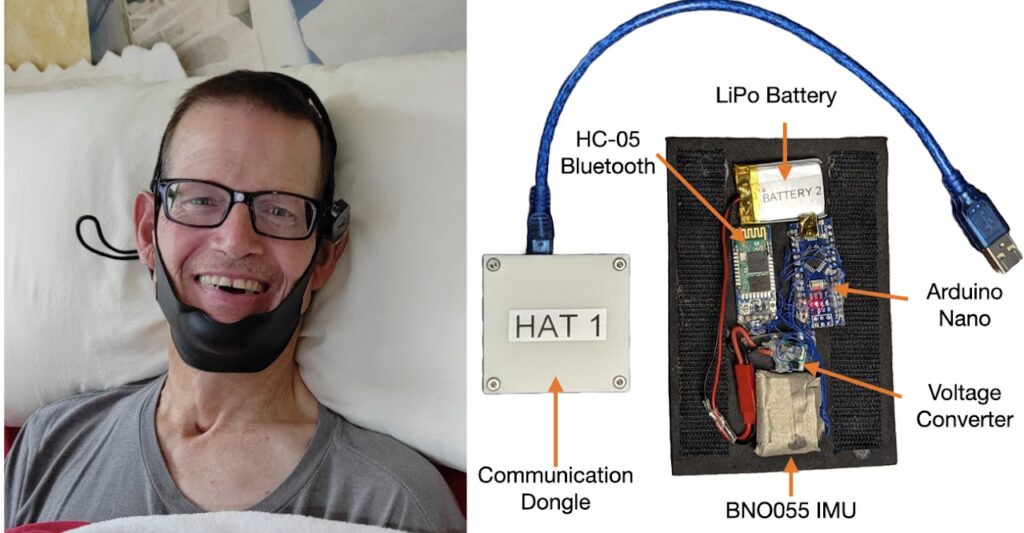

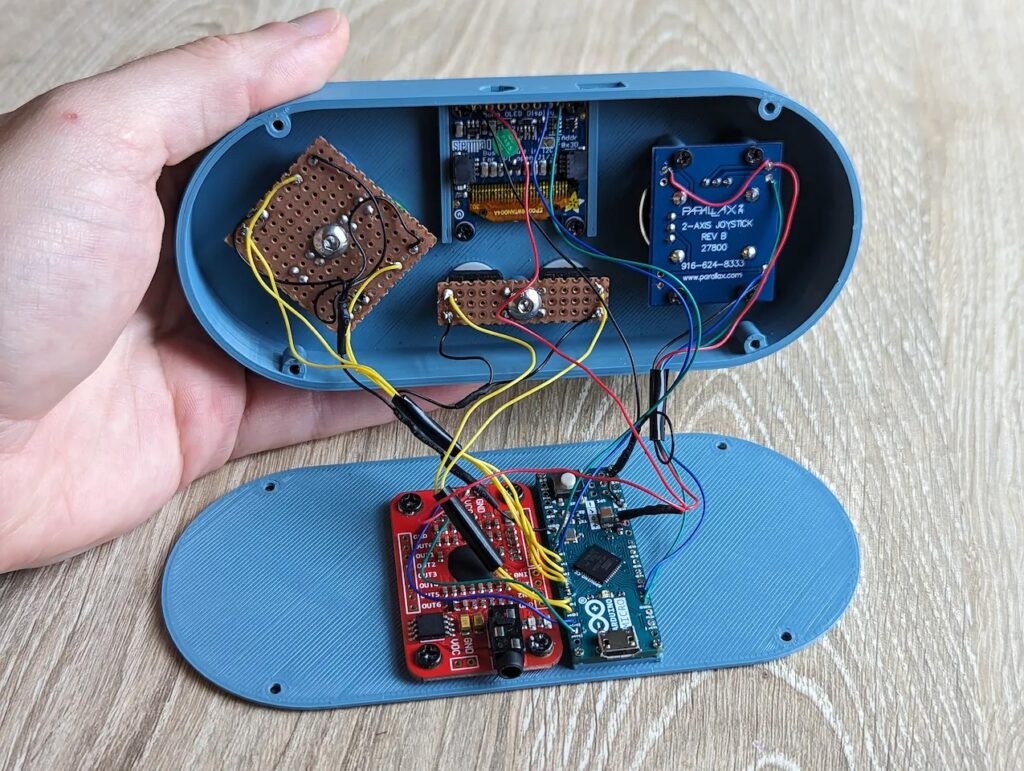

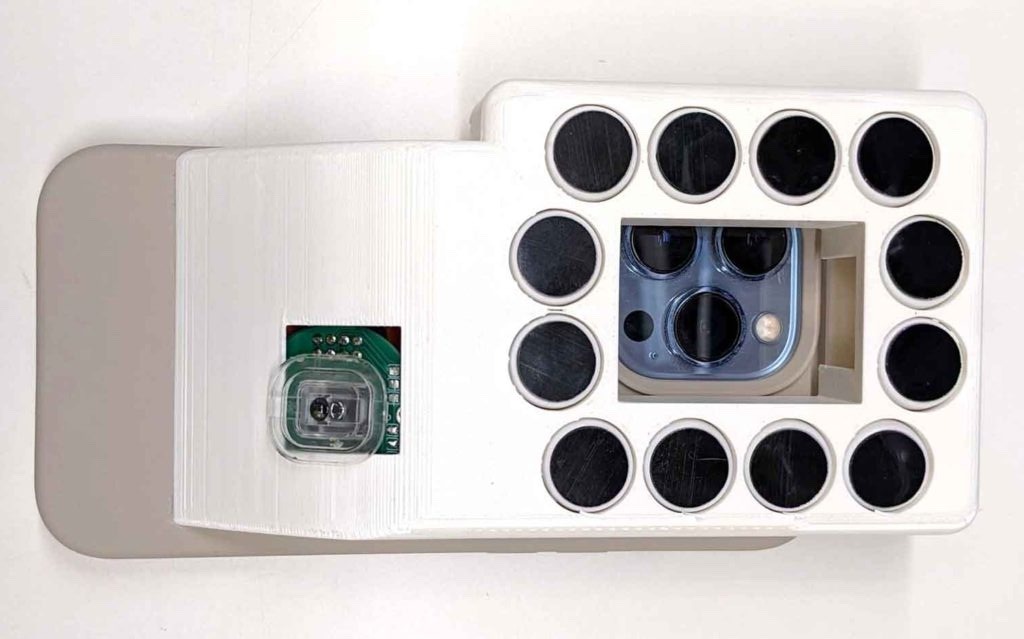

Each button started as a custom 3D-printed shell with compartments for an AA battery holder, a large arcade button, and the perfboard carrying the ESP8266 microcontroller. In this system, each ESP8266 communicates with the Arduino GIGA R1 WiFi board over Wi-Fi through an MQTT message broker running on a host PC. This lets each button be assigned a unique message that specifies the task to be performed.
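On the receiving side, "a unique message per button" boils down to a lookup from the incoming MQTT payload to a task. The write-up doesn't list the actual payloads or drink options, so the strings and `Task` values below are hypothetical, and the Wi-Fi and MQTT client code is left out entirely. This is only a minimal C++ sketch of the dispatch step that would run once a message has arrived.

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <unordered_map>

// Hypothetical tasks the receiver can perform; the real project's options
// are not specified in the write-up.
enum class Task { SmallCup, LargeCup, Espresso };

// Map each button's unique MQTT payload to a task.
// The payload strings are assumptions chosen for illustration.
std::optional<Task> taskForMessage(const std::string& payload) {
    static const std::unordered_map<std::string, Task> table = {
        {"small_cup", Task::SmallCup},
        {"large_cup", Task::LargeCup},
        {"espresso", Task::Espresso},
    };
    auto it = table.find(payload);
    if (it == table.end()) {
        return std::nullopt;  // unknown message: do nothing
    }
    return it->second;
}
```

Returning an empty `std::optional` for unrecognized payloads means a stray or malformed message simply leaves the coffee maker alone instead of triggering a press.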

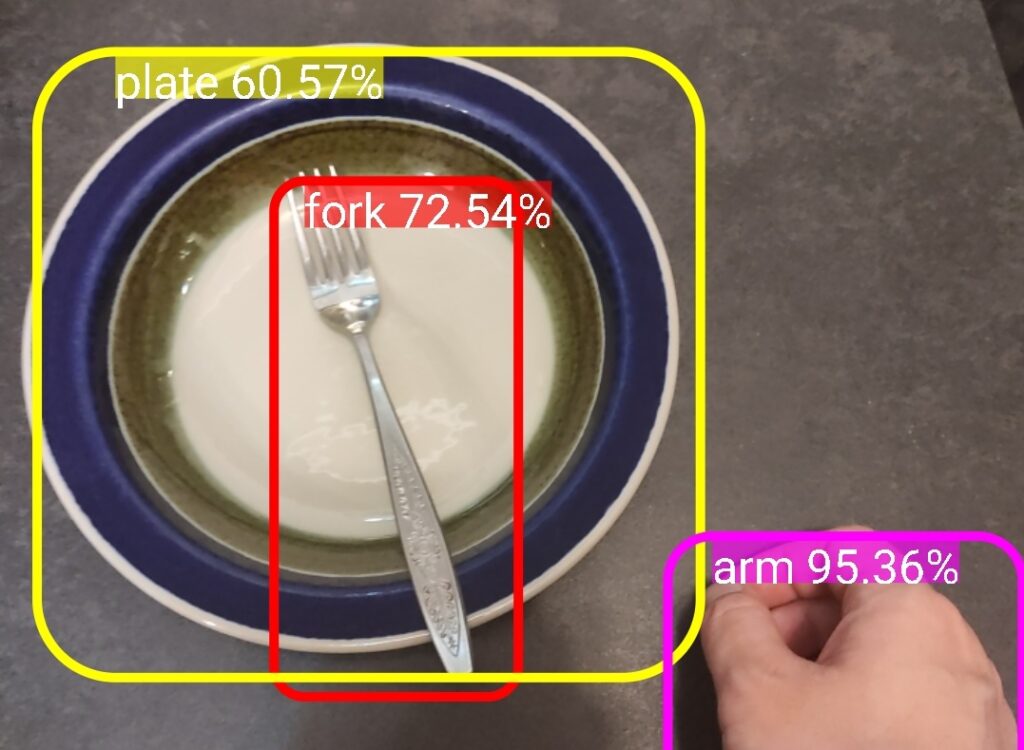

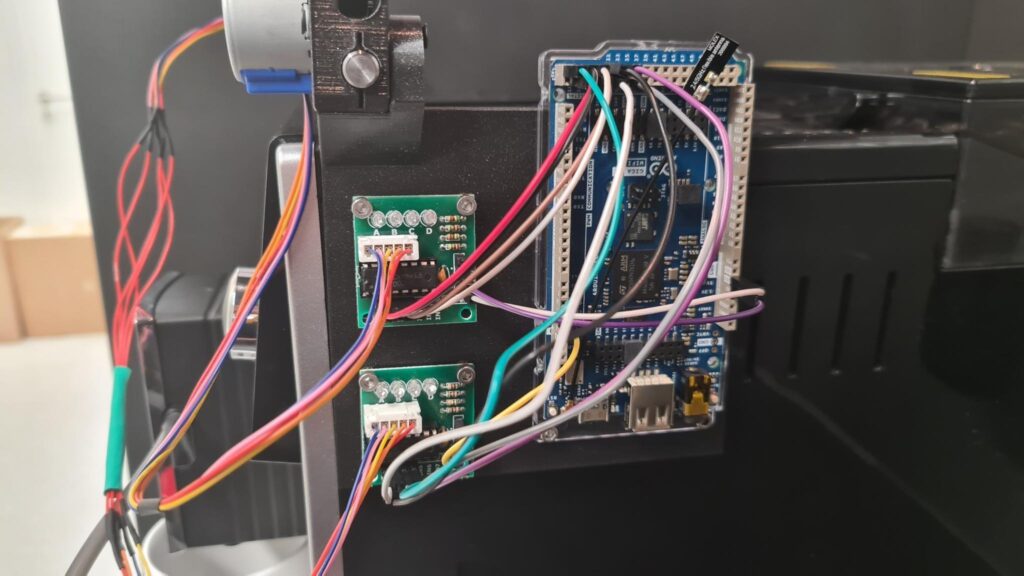

At the coffee maker, the GIGA R1 WiFi was wired to a pair of ULN2003 stepper motor driver modules, which drive the motors that move a gantry along a set of linear rails and push the corresponding button once the correct position has been reached. Ultimately, this allows people with limited mobility and/or dexterity to select what they want from anywhere in the house, all over Wi-Fi.
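A ULN2003 module is a simple Darlington-array driver: the microcontroller energizes its four coil inputs in a fixed order, and each entry in that order advances the motor by one step. The C++ sketch below shows one common way to do this, using the half-step sequence often paired with ULN2003 boards and 28BYJ-48 unipolar motors. The motor model, the coil bit layout, and the `stepsPerMm` calibration value are assumptions, since the write-up does not give them, and writing the pattern to the board's pins and timing the steps are left out.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>

// Half-step sequence commonly used with ULN2003 boards (assumed motor: 28BYJ-48).
// Each entry is a 4-bit mask for inputs IN1..IN4, with bit 0 = IN1.
constexpr std::array<std::uint8_t, 8> kHalfStep = {
    0b0001, 0b0011, 0b0010, 0b0110, 0b0100, 0b1100, 0b1000, 0b1001};

// Coil pattern to output for an absolute step count (may be negative).
std::uint8_t coilPattern(long step) {
    long i = step % 8;
    if (i < 0) {
        i += 8;  // wrap negative step counts into 0..7
    }
    return kHalfStep[static_cast<std::size_t>(i)];
}

// Signed number of steps to move the gantry from currentMm to targetMm along
// the rail. stepsPerMm is a hypothetical calibration value for the drive train.
long stepsToMove(double currentMm, double targetMm, double stepsPerMm) {
    assert(stepsPerMm > 0);
    return std::lround((targetMm - currentMm) * stepsPerMm);
}
```

Because `coilPattern` wraps negative step counts, the same table serves both directions: counting the step index down plays the sequence backwards and reverses the gantry.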

To see how this project was built in greater detail, you can read Orlie’s write-up here on Instructables.

The post This Arduino GIGA R1 WiFi project turns a coffee maker into a more accessible appliance appeared first on Arduino Blog.