
The entire tech industry is desperate for a practical wearable human-machine interface (HMI) right now. The most newsworthy devices at CES this year were the Rabbit R1 and the Humane AI Pin, both of which attempt to streamline wearable interfaces with and for AI. Both have numerous drawbacks, as do most other approaches. What the world really needs is an affordable, practical, and unobtrusive solution, and North Carolina State University researchers may have found the answer in machine learning-optimized fabric buttons.

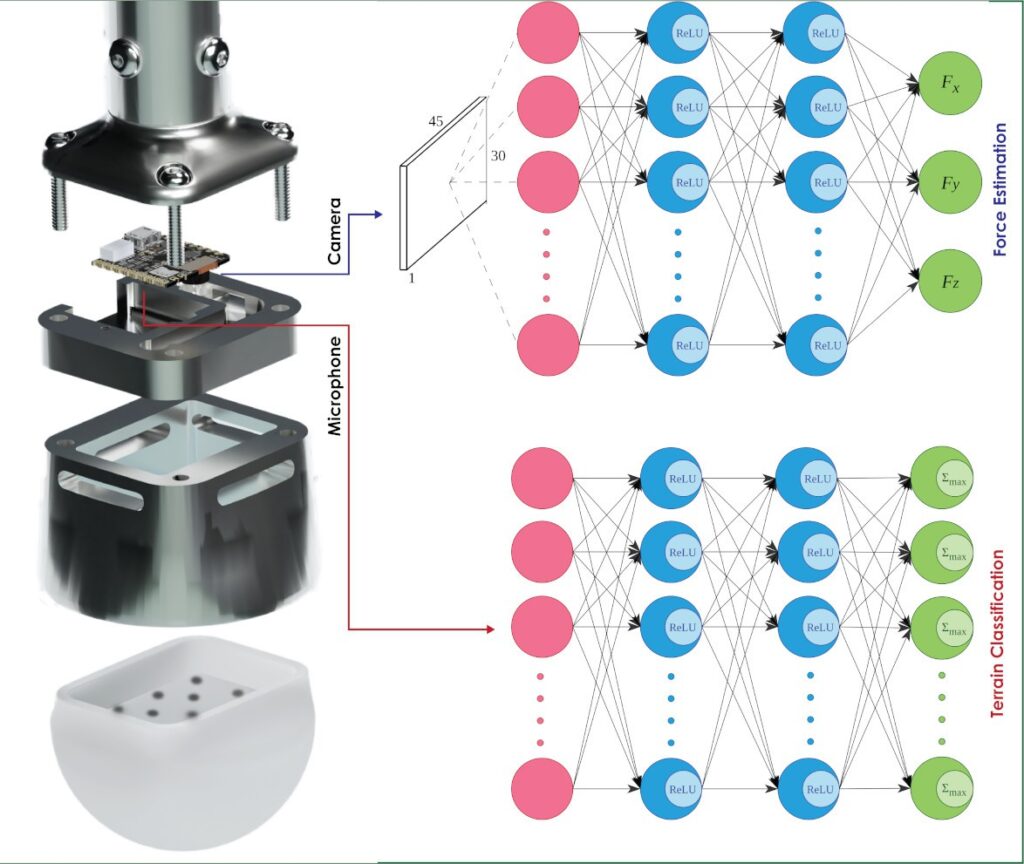

It is, of course, possible to attach a conventional button to fabric. But by making the button itself from fabric, these researchers have improved comfort, lowered costs, and introduced a lot more flexibility, both literally and metaphorically. These are triboelectric touch sensors, which gauge the force exerted on them by measuring the electrical signal generated between two layers of opposite charge.

But there is a problem with this approach: the measured values vary dramatically with usage, environmental conditions, manufacturing tolerances, and physical wear. A fabric button on one shirt sleeve may produce very different readings from an identical button on another. For a simple binary button, that wouldn't matter much. But the point of this sensor type is to report a value on a one-dimensional scale that tracks the applied pressure, so consistency is important.

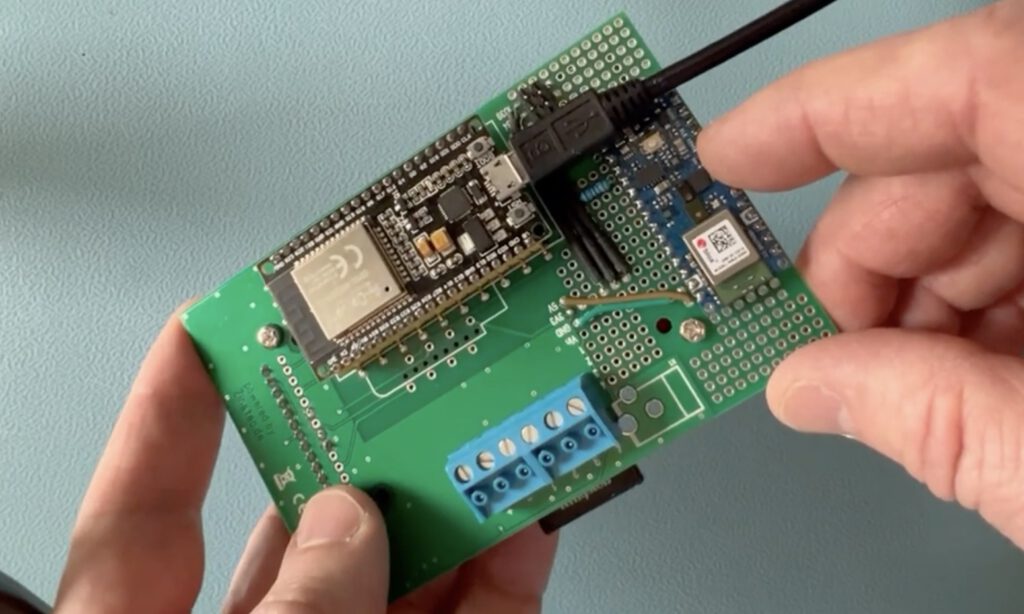

Because achieving physical consistency isn't practical, the team turned to machine learning. A TensorFlow Lite for Microcontrollers model, running on an Arduino Nano ESP32 board, interprets the readings from the sensors. The model can distinguish six interactions: single clicks, double clicks, triple clicks, single slides, double slides, and long presses.
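The post does not describe the model's input format. As a hypothetical sketch of the plumbing that typically surrounds such a classifier, the Python below cuts a stream of sensor readings into overlapping fixed-length windows and decodes a six-way score vector into one of the interactions listed above. The window length, hop size, label order, confidence threshold, and all function names are assumptions, and the trained model itself is left out; on the Nano ESP32, equivalent logic would be written in C++ alongside TensorFlow Lite for Microcontrollers.

```python
import math
from typing import List, Optional, Sequence

# The six interactions named in the post. The label order is an assumption;
# a real model defines its own output order.
GESTURES = [
    "single_click", "double_click", "triple_click",
    "single_slide", "double_slide", "long_press",
]


def sliding_windows(samples: Sequence[float], window: int, hop: int) -> List[List[float]]:
    """Cut a stream of sensor samples into overlapping fixed-length windows.

    Trailing samples that do not fill a whole window are dropped.
    """
    if window <= 0 or hop <= 0:
        raise ValueError("window and hop must be positive")
    return [list(samples[i:i + window])
            for i in range(0, len(samples) - window + 1, hop)]


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores to probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode(logits: Sequence[float], threshold: float = 0.5) -> Optional[str]:
    """Map one score per gesture to a label, or None if no class is confident enough."""
    if len(logits) != len(GESTURES):
        raise ValueError("expected one score per gesture")
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return GESTURES[best] if probs[best] >= threshold else None


# Hypothetical usage: in practice the scores would come from the trained model.
print(sliding_windows(list(range(10)), window=4, hop=2))
# prints [[0, 1, 2, 3], [2, 3, 4, 5], [4, 5, 6, 7], [6, 7, 8, 9]]
print(decode([0.1, 0.3, 4.0, 0.2, 0.0, 0.5]))  # prints triple_click
```

Returning `None` below the threshold lets an application ignore ambiguous windows instead of forcing every brush against the sleeve into a gesture.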

Even if the exact readings change between sensors (or for the same sensor over time), the patterns are still recognizable to the machine learning model. That could make it practical to build fabric buttons into inexpensive garments, so users could control their devices through their clothing.
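The post does not say how the model copes with this variation internally. A simpler idea, shown here as a hypothetical illustration rather than the researchers' method, is to normalize each window of readings before looking for patterns. The Python sketch below z-score normalizes a window and then counts presses with a hand-written threshold rule; the threshold value and function names are assumptions, and the rule only covers clicks, not slides or long presses.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def zscore(window: Sequence[float]) -> List[float]:
    """Normalize a window to zero mean and unit variance.

    This cancels any constant gain and offset, so two sensors whose readings
    differ only by a*x + b (with a > 0) give the same normalized pattern.
    """
    mu = mean(window)
    sigma = pstdev(window)
    if sigma == 0:  # flat window: no touch activity
        return [0.0] * len(window)
    return [(x - mu) / sigma for x in window]


def count_presses(window: Sequence[float], threshold: float = 1.0) -> int:
    """Count rising edges where the normalized signal crosses `threshold` (hypothetical rule)."""
    presses = 0
    above = False
    for v in zscore(window):
        if v > threshold and not above:
            presses += 1
            above = True
        elif v <= threshold:
            above = False
    return presses


# The same double-click pattern seen through two sensors with different gain and offset.
pattern = [0, 0, 5, 5, 0, 0, 5, 5, 0, 0]
sensor_a = [2.0 * x + 1.0 for x in pattern]
sensor_b = [0.3 * x + 40.0 for x in pattern]
print(count_presses(sensor_a), count_presses(sensor_b))  # prints 2 2
```

Z-scoring only removes linear differences in gain and offset. Nonlinear drift, wear, and gestures that differ in shape rather than scale are not handled by a fixed rule like this, which is where a trained model can help.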

The researchers demonstrated the concept with mobile apps and even a game. More details can be found in their paper.

Image credit: Y. Chen et al.

The post Machine learning makes fabric buttons practical appeared first on Arduino Blog.