
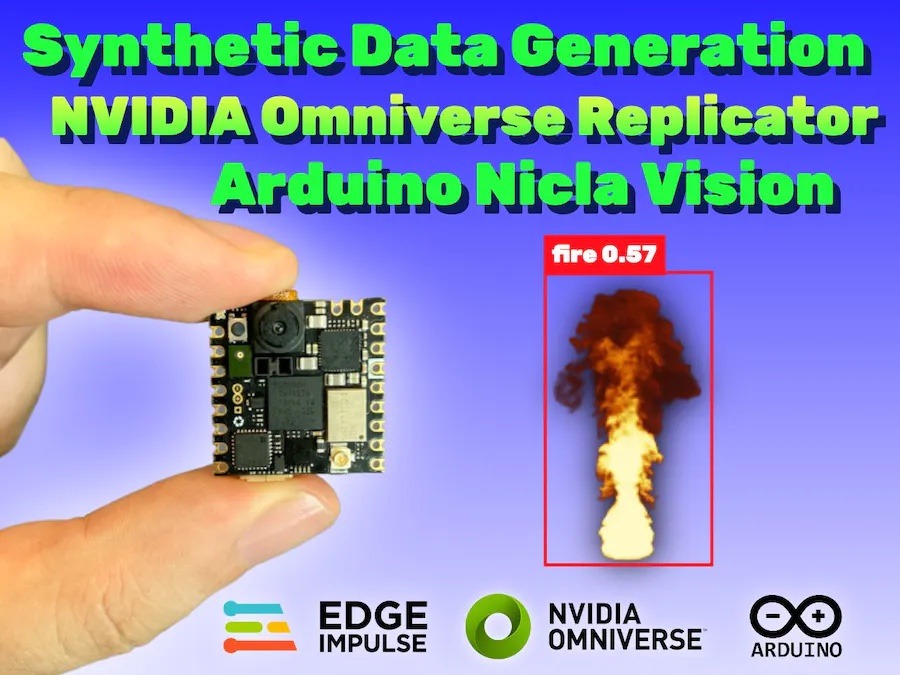

As climate change warms the planet and prolonged droughts make wildfires more likely, detecting a fire soon after it breaks out is vital for responding while it’s still containable. But one major hurdle to collecting machine learning datasets for these events is that they occur sporadically. In his proof-of-concept system, engineer Shakhizat Nurgaliyev shows how he leveraged NVIDIA Omniverse Replicator to create an entirely synthetic dataset and then deployed a model trained on that data to an Arduino Nicla Vision board.

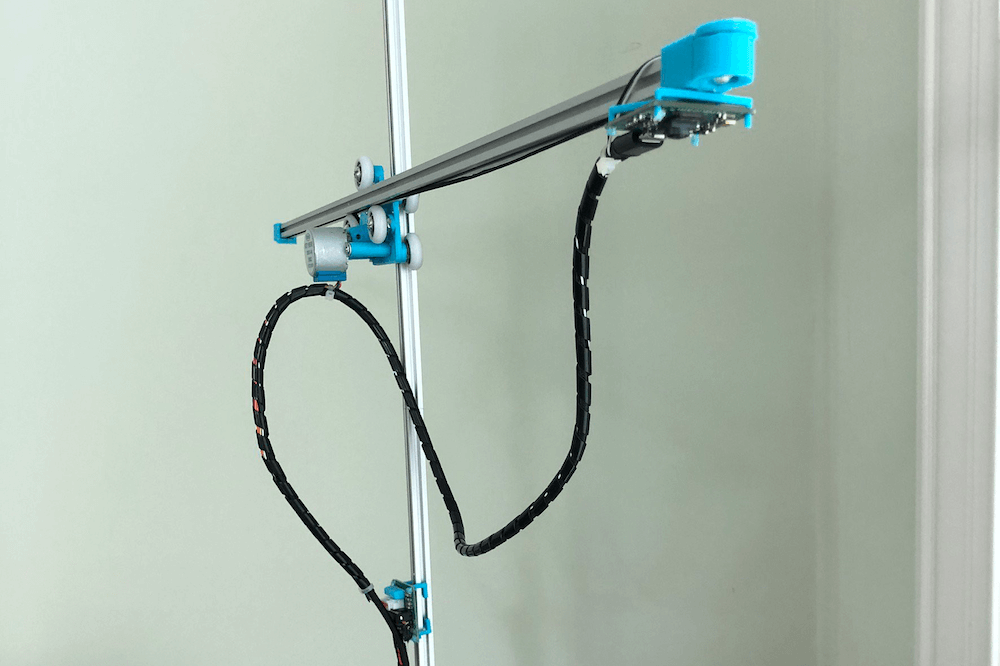

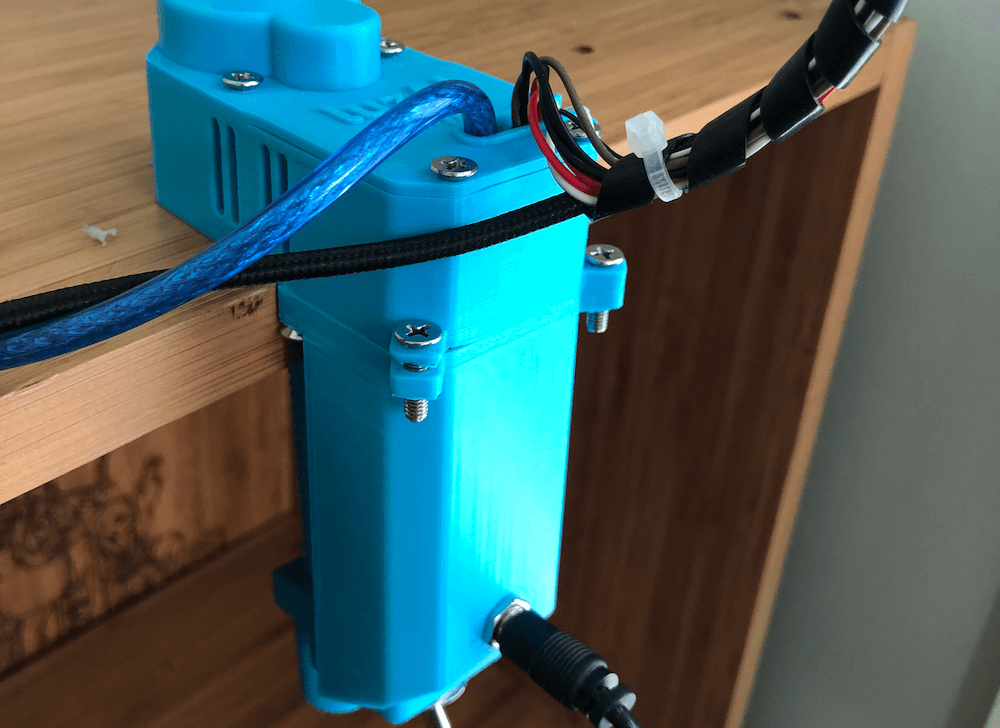

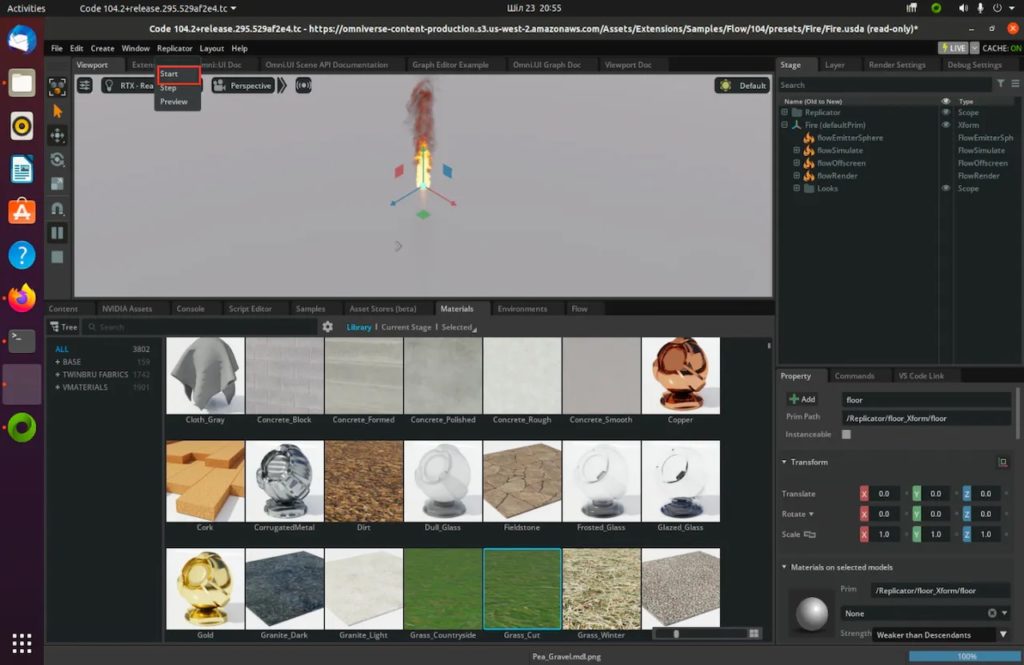

The project started out as a simple fire animation inside Omniverse, soon followed by a Python script that creates a pair of virtual cameras and randomizes the ground plane before capturing images. Once enough images had been generated, Nurgaliyev used the zero-shot object detection model Grounding DINO to automatically draw bounding boxes around the virtual flames. Lastly, the labeled images were brought into an Edge Impulse project and used to develop a FOMO-based object detection model.
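To make the labeling step more concrete, here is a minimal sketch of how auto-generated boxes might be turned into labels for upload. It is not Nurgaliyev's script. It assumes the detector returns boxes as normalized (center x, center y, width, height) values, and that the labels are written in the JSON layout Edge Impulse's uploader reads from a `bounding_boxes.labels` file. The file names, the 320x240 image size, the `fire` label, and both helper functions are hypothetical.

```python
import json


def cxcywh_to_pixel_box(box, img_w, img_h):
    """Convert a normalized (cx, cy, w, h) box to pixel x, y, width, height."""
    cx, cy, w, h = box
    x = (cx - w / 2) * img_w  # left edge in pixels
    y = (cy - h / 2) * img_h  # top edge in pixels
    return {
        "x": max(0, round(x)),
        "y": max(0, round(y)),
        "width": round(w * img_w),
        "height": round(h * img_h),
    }


def build_labels(detections, img_w, img_h, label="fire"):
    """Build a labels dictionary covering every image in `detections`."""
    boxes = {}
    for filename, norm_boxes in detections.items():
        boxes[filename] = [
            {"label": label, **cxcywh_to_pixel_box(b, img_w, img_h)}
            for b in norm_boxes
        ]
    return {"version": 1, "type": "bounding-box-labels", "boundingBoxes": boxes}


# Hypothetical detections for two rendered frames (values made up for illustration).
detections = {
    "fire_0001.png": [(0.5, 0.5, 0.25, 0.5)],
    "fire_0002.png": [(0.25, 0.75, 0.1, 0.2), (0.8, 0.4, 0.2, 0.2)],
}

labels = build_labels(detections, img_w=320, img_h=240)
print(json.dumps(labels, indent=2))
```

Because FOMO learns object centroids rather than full box extents, slightly loose auto-generated boxes are less of a problem here than they would be for a conventional bounding-box detector.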

With this approach, the model achieved an F1 score of nearly 87% while needing at most 239KB of RAM and a mere 56KB of flash storage. With the model deployed as an OpenMV library, Nurgaliyev's video shows how the MicroPython sketch running on a Nicla Vision within the OpenMV IDE detects and bounds flames. More information about this system can be found on Hackster.io.
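For readers unfamiliar with the metric, the F1 score is the harmonic mean of precision and recall, so it only climbs when the model both avoids false alarms and catches most real flames. The sketch below shows the arithmetic. The counts are made up for illustration and are not taken from the project's test results.

```python
def f1_score(tp, fp, fn):
    """Compute F1 from true positives, false positives, and false negatives."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts: 87 flames found, 13 false alarms, 13 flames missed.
score = f1_score(tp=87, fp=13, fn=13)
print(f"F1 = {score:.2%}")
```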

The post This Nicla Vision-based fire detector was trained entirely on synthetic data appeared first on Arduino Blog.