Percussion instruments are likely the first kind of instrument humanity invented, because they’re quite simple: hit a thing and a noise happens. Different things produce different frequencies with different timbres, and glass bottles have a nice xylophonic sound to them. Because glass bottles are easy to find in the trash, Jens of the Jens Maker Adventures YouTube channel took advantage of them to build this awesome robotic instrument.

Jens started by collecting a bunch of different bottles. While searching, he tapped each one to get a sense of the note it produced, which he could then lower by adding some water to fine-tune the pitch. Once he had enough bottles to cover a range of notes, he set out to construct a robot to play them.
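To judge how far a tapped bottle sits from a usable note, you can convert its measured frequency to the nearest MIDI note with the standard equal-temperament formula $n = 69 + 12\log_2(f/440)$, where MIDI note 69 is A4 at 440 Hz. The sketch below is only an illustration of that arithmetic, not how Jens tuned his bottles (the article mentions only tapping and adding water). The sample frequencies and the 5-cent tolerance are hypothetical assumptions.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def nearest_note(freq_hz: float) -> tuple[int, float]:
    """Return (MIDI note, offset in cents) for the equal-tempered note nearest freq_hz.

    MIDI note 69 is A4 = 440 Hz, and each semitone is 100 cents.
    A positive offset means the bottle is sharp; a negative one means it is flat.
    """
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    exact = 69 + 12 * math.log2(freq_hz / 440.0)
    note = round(exact)
    return note, (exact - note) * 100


def note_name(midi_note: int) -> str:
    """Name a MIDI note in scientific pitch notation, e.g. 60 -> 'C4'."""
    return f"{NOTE_NAMES[midi_note % 12]}{midi_note // 12 - 1}"


def tuning_advice(cents: float, tolerance: float = 5.0) -> str:
    """Suggest a fix; the default 5-cent tolerance is an arbitrary assumption.

    Adding water lowers a struck bottle's pitch, so a sharp bottle is
    corrected by adding water and a flat one by removing some.
    """
    if abs(cents) <= tolerance:
        return "in tune"
    if cents > 0:
        return "add water"
    return "remove water or try another bottle"


if __name__ == "__main__":
    # Hypothetical tap measurements, not values from Jens's build.
    for f in (523.0, 600.0, 700.0):
        note, cents = nearest_note(f)
        print(f"{f:.1f} Hz -> {note_name(note)} ({cents:+.1f} cents): {tuning_advice(cents)}")
```

Because water can only pull a struck bottle's pitch down, it helps to start with bottles that ring slightly sharp of the target note.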

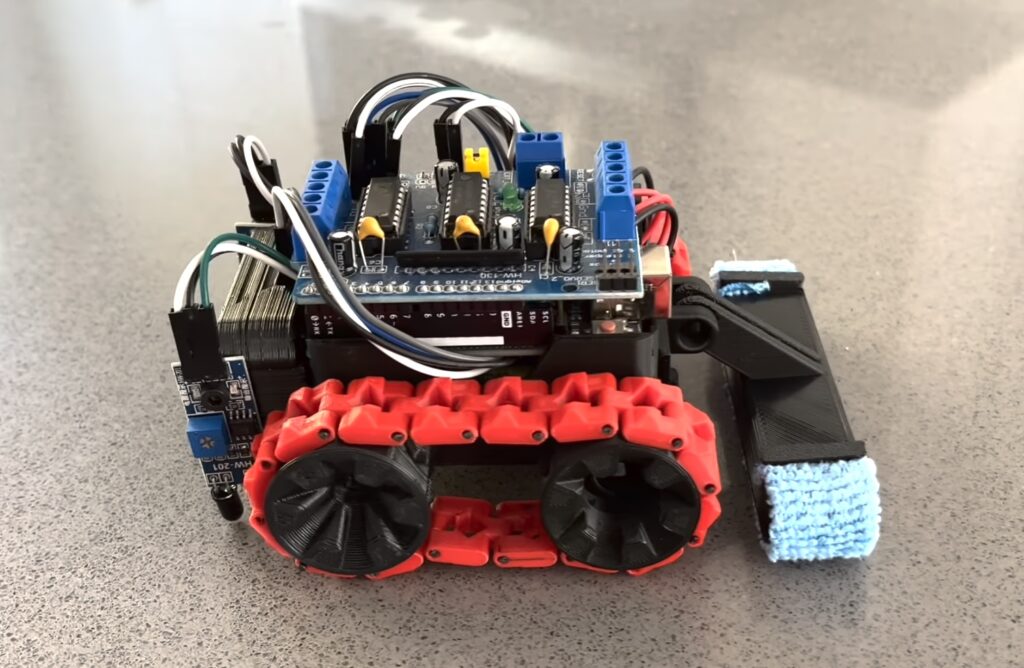

Solenoid actuators tap the bottles, and an Arduino UNO Rev3 board controls the tapping according to MIDI files created in the popular Ableton software. Jens matched the available notes in Ableton to those produced by the glass bottles, so he could compose melodies from those notes knowing the robot could play them. The Arduino reads the MIDI files output by Ableton and strikes the corresponding bottles.

Finally, Jens laser-cut a plywood frame and enclosure that hold the bottles, the Arduino, and the solenoids. The machine uses seven bottles, so it can play seven notes.

Jens demonstrated the instrument by playing guitar along with it, and the result sounds very pleasant, especially for something made from garbage.

The post This robot turns old bottles into a musical instrument appeared first on Arduino Blog.