
Air quality concerns encompass several different pollutants and irritants. Chlorofluorocarbons (CFCs), for example, were a major concern in the 20th century because of the damage they cause to the ozone layer. But not all pollutants are easy to monitor. Hydrogen sulfide, which irritates the eyes, nose, and throat at low levels and causes much more serious symptoms at high levels, can collect in pockets. To find those pockets, researchers from Brazil have developed a low-cost lab-on-a-drone.

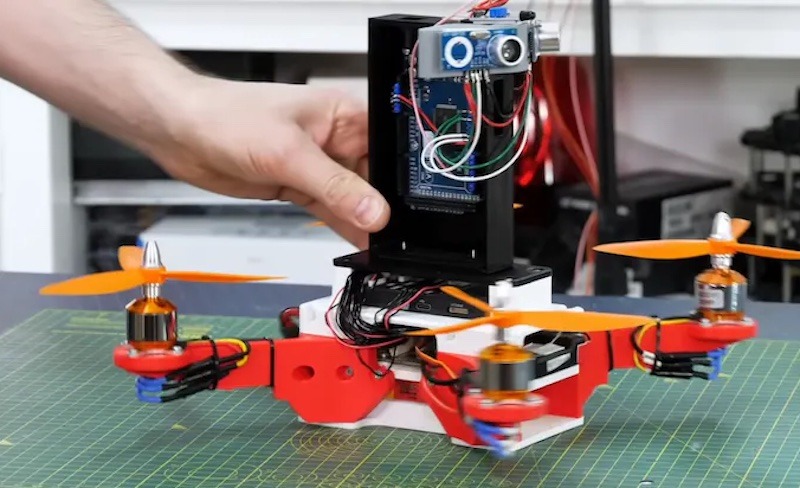

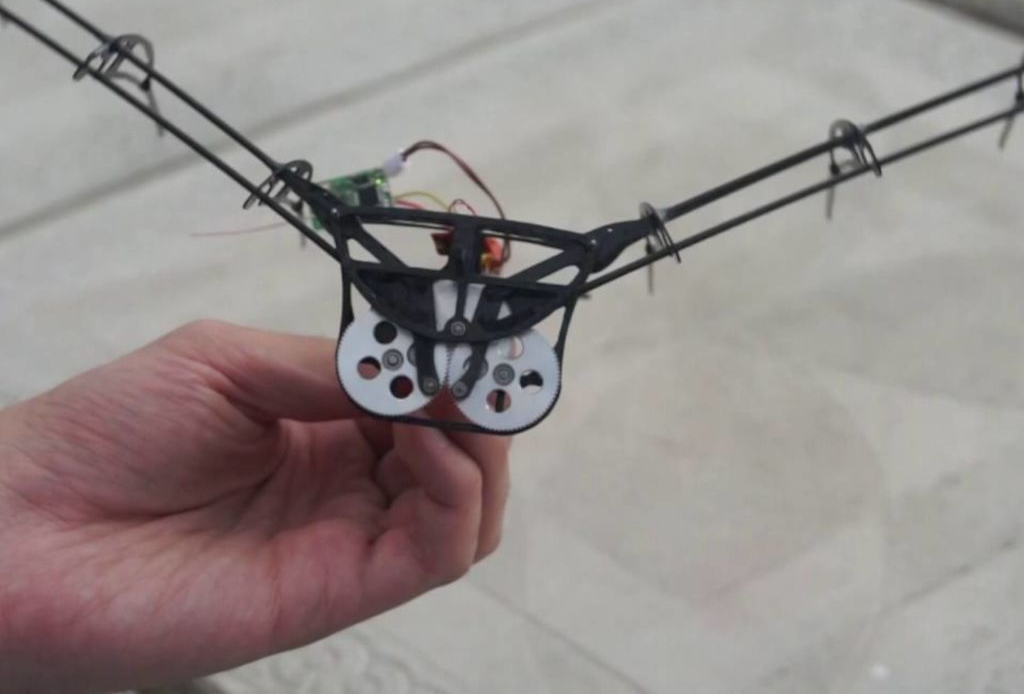

The CDC reports that hydrogen sulfide exposure is a risk for those working in rayon textile manufacturing, petroleum and natural gas drilling and refining, wastewater treatment, and farms with manure storage pits. Because industry isn’t always keen on environmental protection, these researchers wanted a way to find pockets of high hydrogen sulfide concentration. To detect that gas efficiently at a variety of altitudes, they decided a drone-mounted approach was best.

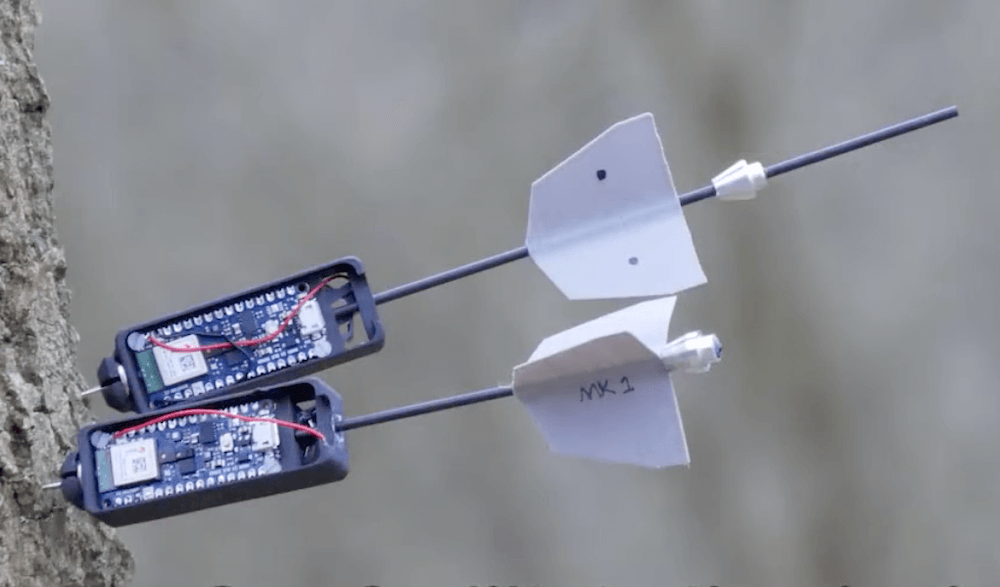

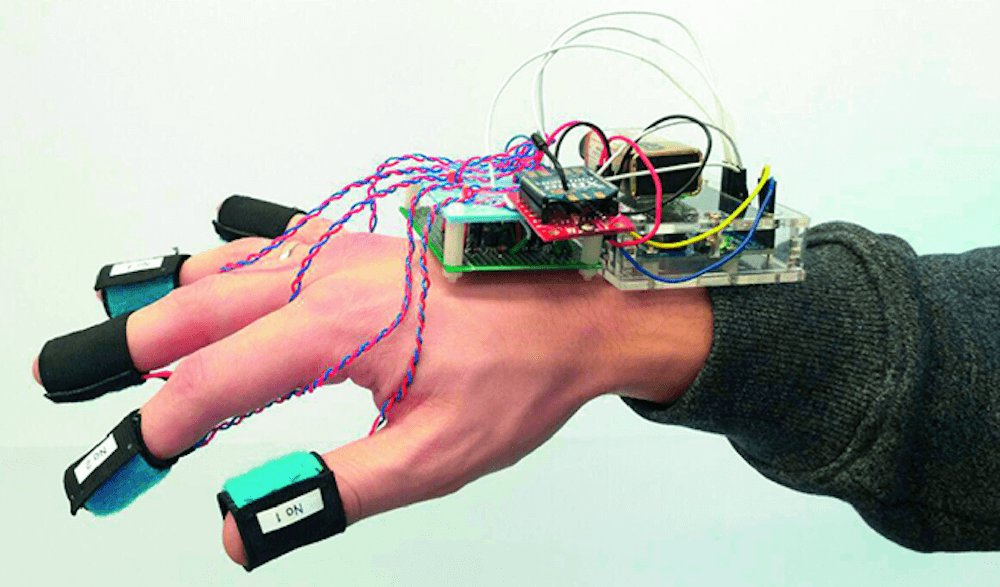

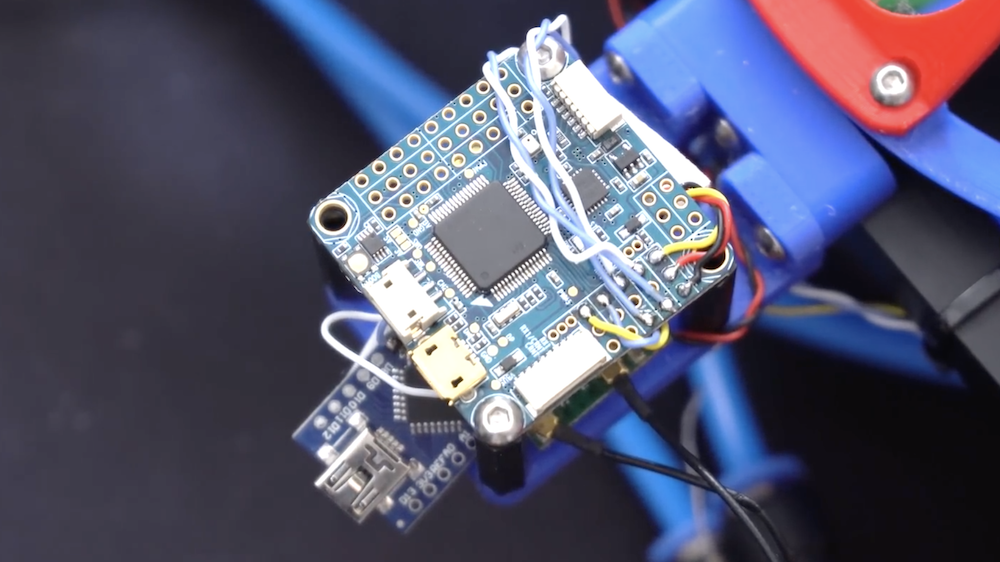

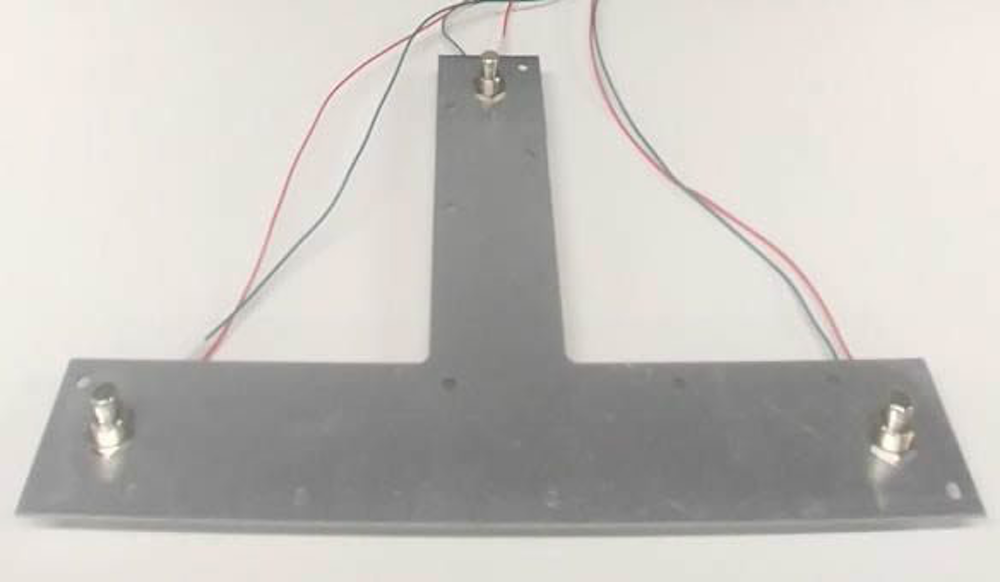

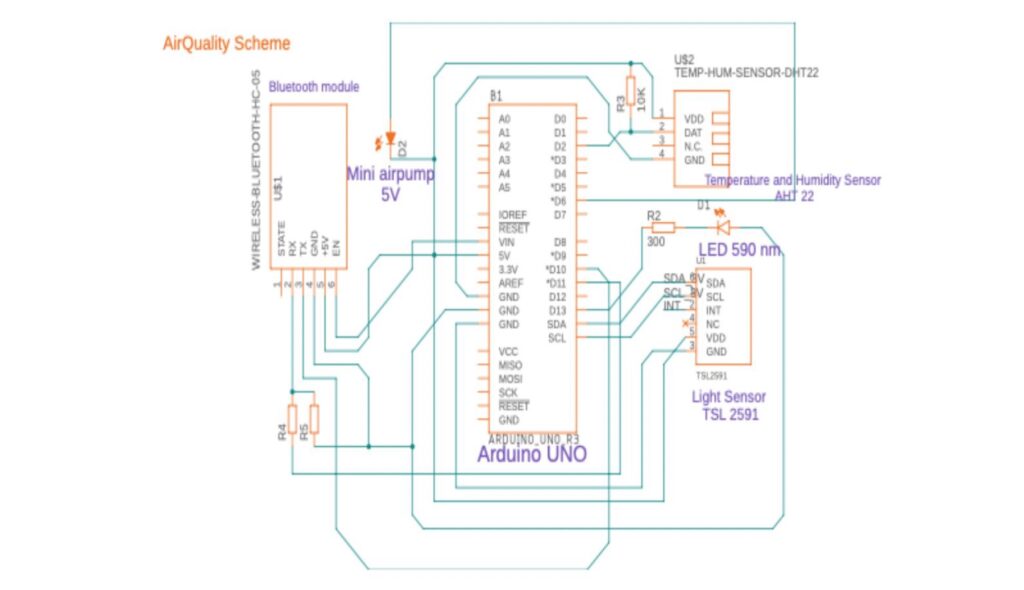

They achieved that by designing a sensor system light enough to be carried by off-the-shelf consumer drones. That payload consists of an Arduino UNO R3 board, the hydrogen sulfide sensor, an air pump for that sensor, and a DHT22 temperature and humidity sensor. It also has an HC-05 Bluetooth® module, so the researchers can monitor readings from anywhere within range.
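To give a feel for what monitoring those readings over Bluetooth could look like on the receiving end, here is a minimal Python sketch. It is not the researchers' software. It assumes, purely for illustration, that the UNO prints one comma-separated line per sample (`elapsed_ms,h2s_raw,temp_c,humidity_pct`) and that the gas sensor is read as a raw value from the UNO's 10-bit ADC with the default 5 V reference. The `Reading` class and the `parse_line` and `adc_to_volts` helpers are hypothetical names, and the sample lines are made up.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Reading:
    elapsed_ms: int      # milliseconds since the board started (assumed field)
    h2s_raw: int         # raw 10-bit ADC count from the gas sensor (assumed field)
    temp_c: float        # DHT22 temperature in degrees Celsius
    humidity_pct: float  # DHT22 relative humidity in percent


def parse_line(line: str) -> Optional[Reading]:
    """Parse one line of the assumed CSV format; return None if it is malformed."""
    parts = line.strip().split(",")
    if len(parts) != 4:
        return None
    try:
        reading = Reading(int(parts[0]), int(parts[1]),
                          float(parts[2]), float(parts[3]))
    except ValueError:
        return None
    # A 10-bit ADC can only report counts from 0 to 1023.
    if not 0 <= reading.h2s_raw <= 1023:
        return None
    return reading


def adc_to_volts(raw: int, vref: float = 5.0) -> float:
    """Convert a 10-bit ADC count to volts, assuming a 5 V reference."""
    return raw * vref / 1024


if __name__ == "__main__":
    # Made-up sample lines standing in for data arriving over the serial link.
    sample_stream = ["1500,512,24.5,61.0", "not,a,valid,line", "3000,530,24.4,60.8"]
    for text in sample_stream:
        reading = parse_line(text)
        if reading is None:
            print(f"skipped malformed line: {text!r}")
        else:
            print(f"{reading.elapsed_ms} ms: {adc_to_volts(reading.h2s_raw):.3f} V, "
                  f"{reading.temp_c} C, {reading.humidity_pct} %")
```

In practice, a paired HC-05 typically shows up on the computer as a serial port, so the lines would be read from that port instead of a hard-coded list. Turning the sensor voltage into a hydrogen sulfide concentration depends on the specific sensor and its calibration, which this sketch does not attempt.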

The team found a significant increase in hydrogen sulfide levels as the drone climbed, indicating that existing sensors on the ground are insufficient for monitoring this kind of pollution. You can read more in their paper, published in Analytical Chemistry.

Image credits: Leal et al., Analytical Chemistry, 2023, DOI: 10.1021/acs.analchem.3c02719

The post Lab-on-a-drone detects and analyzes pollutants from the sky appeared first on Arduino Blog.