
There is no shame in taking advantage of a voice assistant device, like an Amazon Echo with Alexa. Those devices are useful and can add real convenience to your life. But they lack personality and any feeling of a soul—not just because of the stilted voices, but also because of the boring industrial designs intended for mass market appeal. To inject some life into his Amazon Echo, Workshop Nation turned it into a charming animatronic robot.

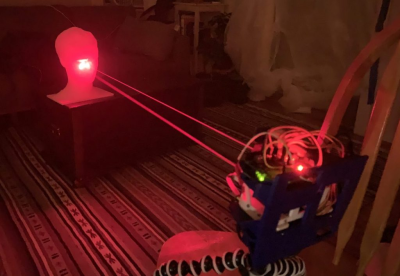

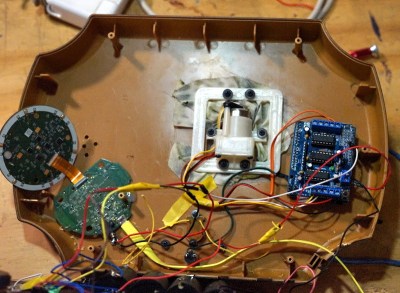

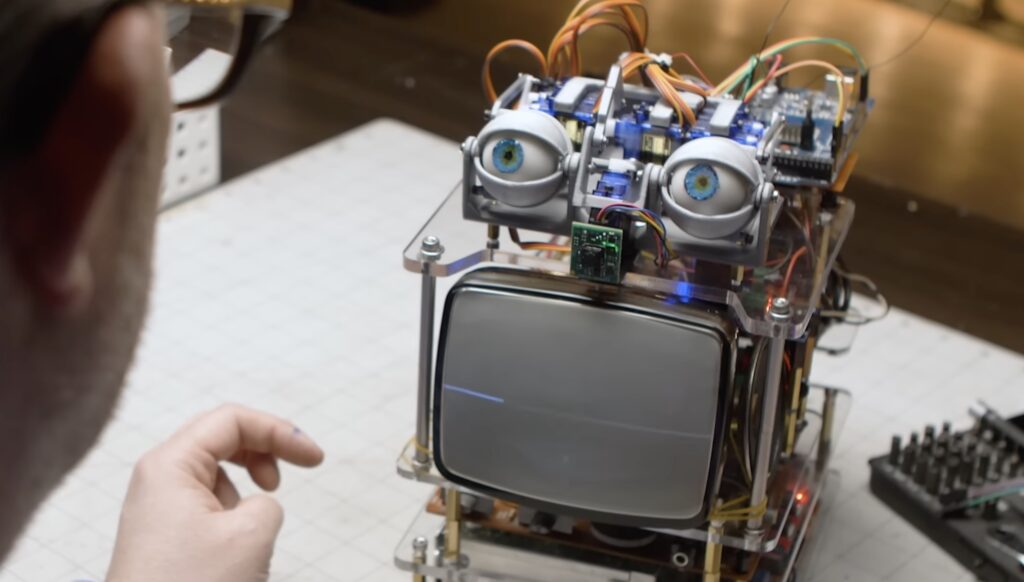

At its heart, this is still an Amazon Echo, and it retains all of its functionality. But the Alexa brain now inhabits a body that looks like it was built by a wacky scientist in an ’80s movie about robot hijinks. It was cobbled together from salvaged parts, such as an old CRT TV, along with new components. Its 3D-printed animatronic eyes are based on a design by Will Cogley and are actuated by servo motors. Something akin to a voice waveform appears on the CRT whenever Alexa speaks. The effect works by using the audio signal's voltage to drive one of the coils that deflect the electron beam.

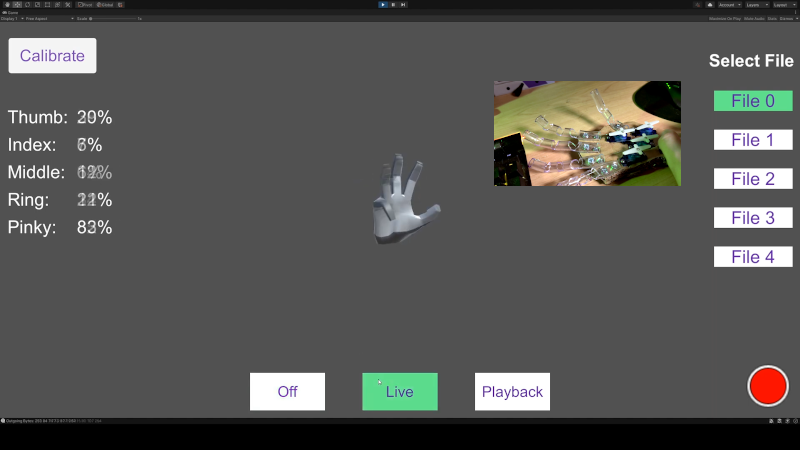

An Arduino Mega 2560 board controls the animatronics and also monitors a Useful Sensors Person Sensor, which lets the eyes follow any people in the area. The Arduino also lets users bypass the normal “Alexa” wake word, so they can start questions with whatever term they prefer. All of those components (most of which belong to the CRT) attach to a frame made of laser-cut clear acrylic and threaded rods.
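The post doesn't show the tracking code, but the basic idea of steering eyes toward a detected person can be sketched in a few lines. The version below is a hypothetical illustration in Python rather than the actual Arduino sketch. It assumes the sensor reports each face as a bounding box on a 0 to 255 scale with a 0 to 100 confidence value. The `Face` class, the servo limits, and the `SMOOTHING` factor are all made-up values chosen for illustration. On each update, the code picks the largest confident face, which is likely the nearest person, and maps the center of its box to pan and tilt angles. It then eases the eyes partway toward that target so they glide instead of snapping.

```python
# Hypothetical sketch of person-following eyes; not Workshop Nation's code.
# Assumption: the sensor reports face boxes on a 0..255 scale with a
# 0..100 confidence. Servo limits and smoothing below are illustrative.
from dataclasses import dataclass

SENSOR_MAX = 255              # assumed coordinate range of the sensor
PAN_MIN, PAN_MAX = 60, 120    # hypothetical left/right servo limits (degrees)
TILT_MIN, TILT_MAX = 70, 110  # hypothetical up/down servo limits (degrees)
SMOOTHING = 0.3               # fraction of remaining distance moved per update


@dataclass
class Face:
    box_left: int
    box_top: int
    box_right: int
    box_bottom: int
    confidence: int  # 0..100


def map_range(value, in_min, in_max, out_min, out_max):
    """Linear rescale, similar to Arduino's map() but using float math."""
    return out_min + (value - in_min) * (out_max - out_min) / (in_max - in_min)


def target_angles(face):
    """Aim at the center of the face's bounding box.

    Depending on how the sensor is mounted, the pan axis may need mirroring.
    """
    cx = (face.box_left + face.box_right) / 2
    cy = (face.box_top + face.box_bottom) / 2
    pan = map_range(cx, 0, SENSOR_MAX, PAN_MIN, PAN_MAX)
    tilt = map_range(cy, 0, SENSOR_MAX, TILT_MIN, TILT_MAX)
    return pan, tilt


def pick_face(faces, min_confidence=60):
    """Return the largest face above the confidence threshold, or None."""
    candidates = [f for f in faces if f.confidence >= min_confidence]
    if not candidates:
        return None
    return max(
        candidates,
        key=lambda f: (f.box_right - f.box_left) * (f.box_bottom - f.box_top),
    )


def step(current, faces):
    """One control-loop update: ease (pan, tilt) toward the chosen target."""
    face = pick_face(faces)
    if face is None:
        # Nobody detected: drift back to a centered resting gaze.
        target = ((PAN_MIN + PAN_MAX) / 2, (TILT_MIN + TILT_MAX) / 2)
    else:
        target = target_angles(face)
    return tuple(c + SMOOTHING * (t - c) for c, t in zip(current, target))


# Usage: a person near the left edge of the sensor's view.
pos = (90.0, 90.0)
faces = [Face(0, 100, 51, 155, confidence=95)]
for _ in range(20):
    pos = step(pos, faces)
print(f"pan={pos[0]:.1f}, tilt={pos[1]:.1f}")
```

With these assumed limits, the face center (25.5, 127.5) maps to a target of 66° pan and 90° tilt, and the loop converges on it over a few dozen updates. Easing instead of jumping straight to the target is one simple way to make servo-driven eyes look less mechanical. A real build would also write these angles to the servos on each pass of the loop.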

The result is a contraption that combines all of the convenience of a modern voice assistant with the aesthetic appeal of a science fair reject.

The post Amazon Echo becomes charming animatronic robot appeared first on Arduino Blog.