
While we adults don’t experience them often, school kids practice fire drills on a regular basis. Those drills are important for safety, but kids often don’t take them seriously. At best, they see the drills as a short break from their lessons. But what if they could actually see the flames? Developed by a team of Sejong University engineers, this augmented reality fire drill system takes cues from video games to provide more effective training.

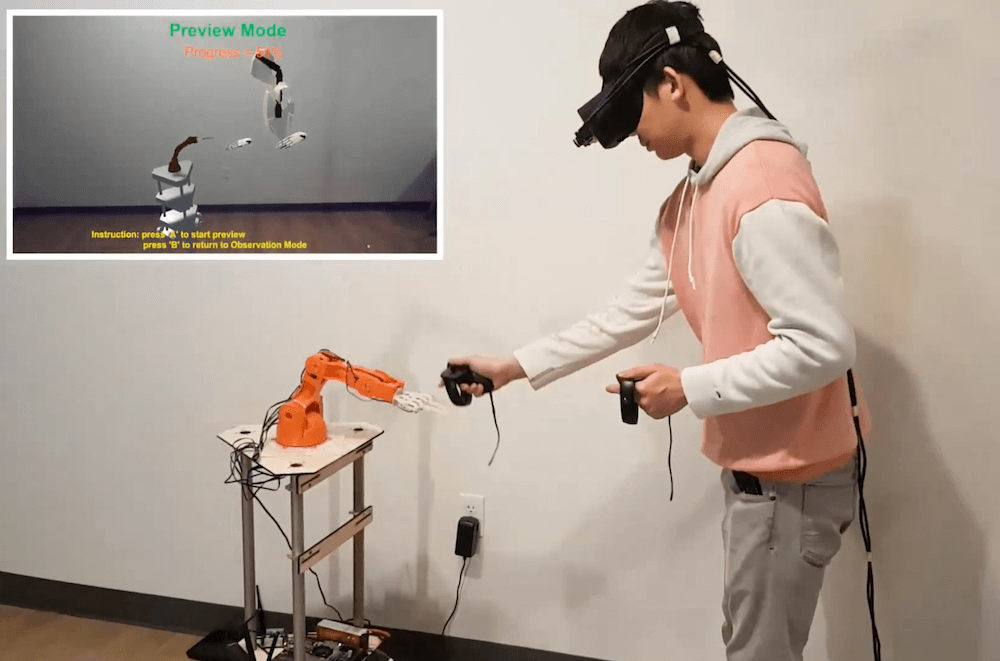

This mixed reality system, which combines virtual reality and augmented reality elements, makes fire drill training more interactive. Instead of just evacuating a building by following a predefined route, participants perform basic firefighting tasks and move through smoke-filled rooms. Because it uses a familiar, video game-like medium, it gives kids a more realistic and believable idea of what an emergency might look like. It is just as useful for adults, because it challenges them to take action.

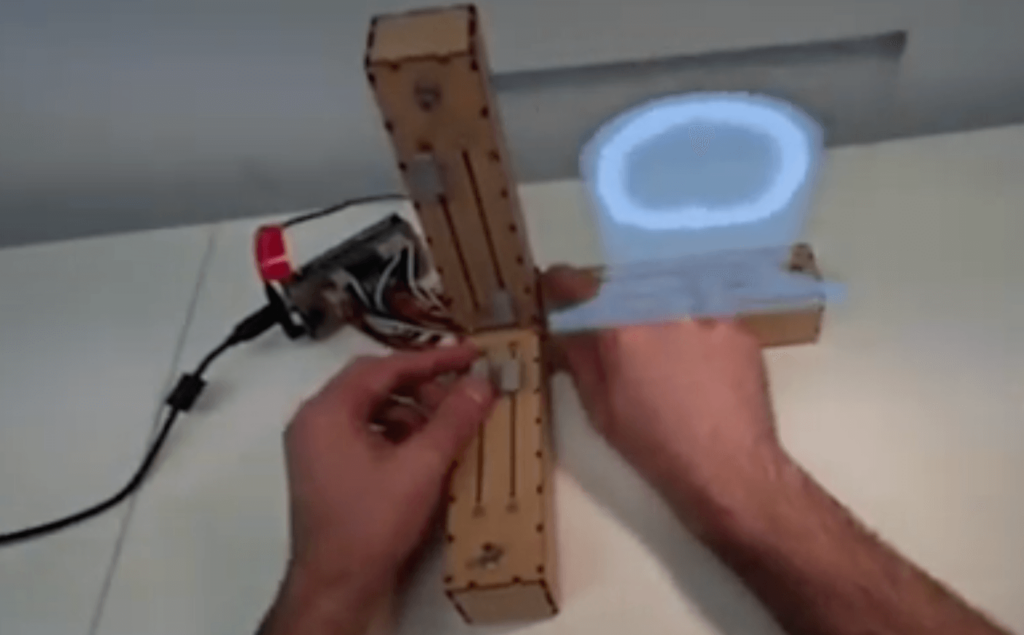

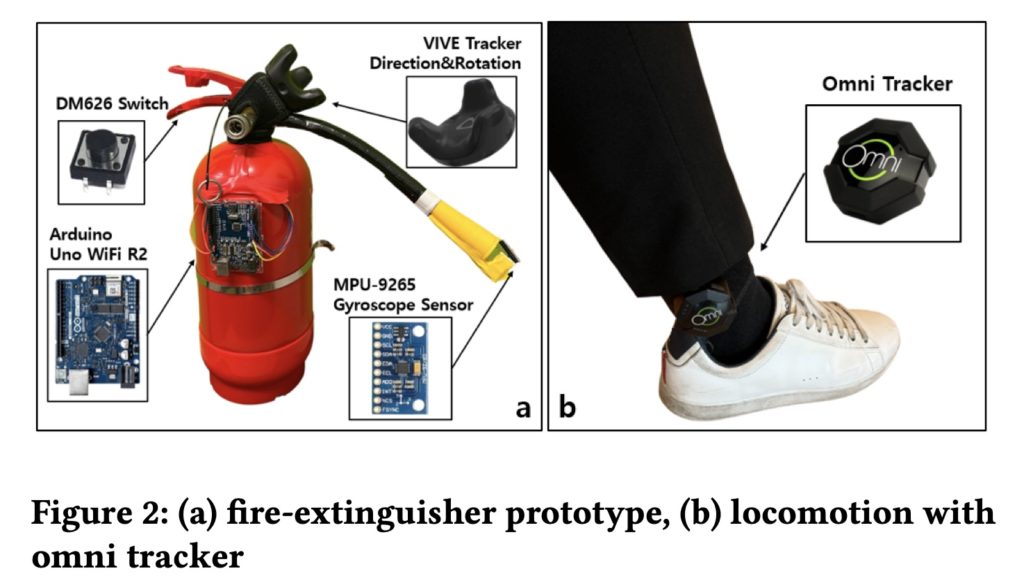

That action comes primarily in the form of virtual fires, which participants must douse using fire extinguishers. The mixed reality visuals are relatively straightforward, since the technology is now mainstream. The VIVE VR system can, for example, recognize objects like tables and overlay flame effects on them. The fire extinguisher is what stands out. Instead of a standard VR controller, this system uses a custom interface that looks and feels like a real fire extinguisher.

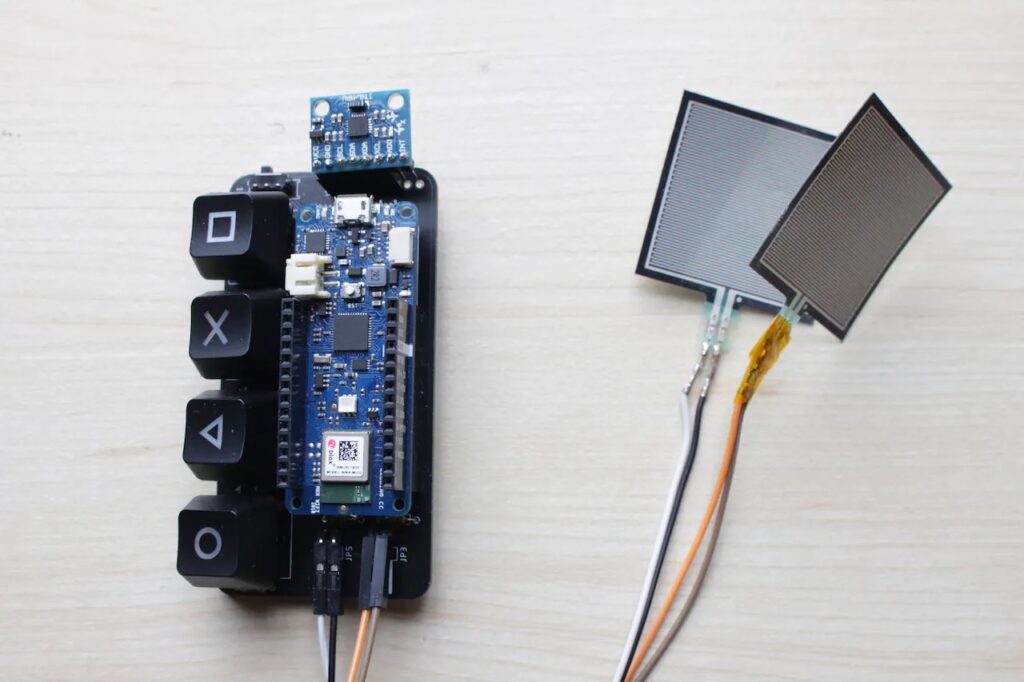

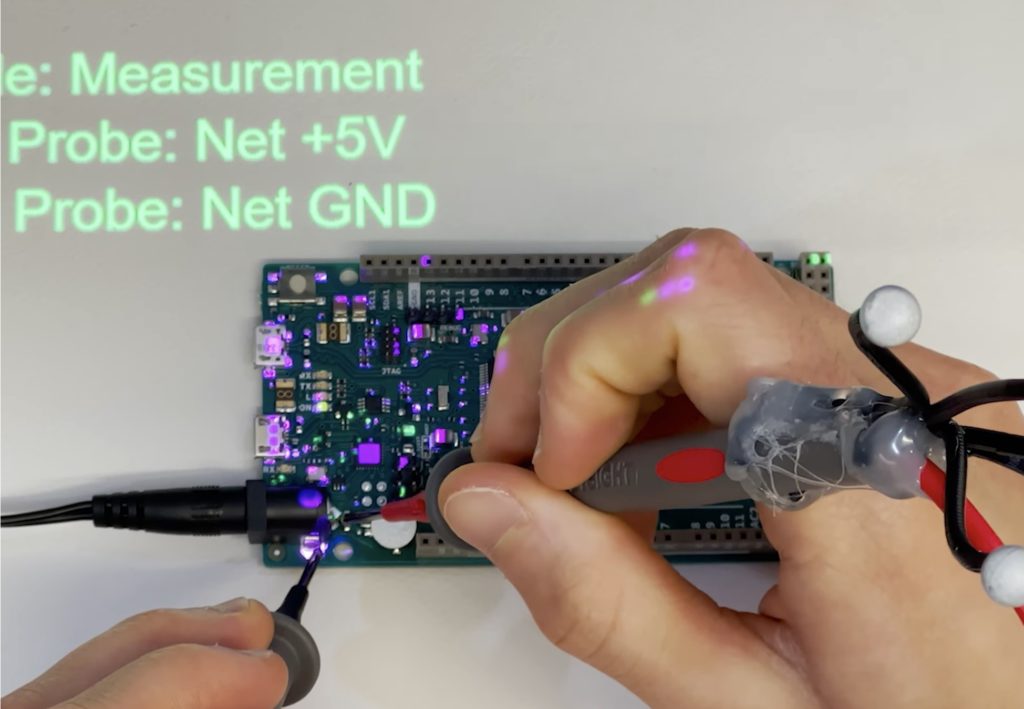

That extinguisher has a VIVE PRO tracker, which lets the system monitor its position. The nozzle has an MPU-9265 gyroscope and the handle has a momentary switch. Both connect to an Arduino Uno WiFi Rev2 board, which feeds the sensor data to the mixed reality system. With this hardware, participants can manipulate the virtual fire extinguisher just like a real one. The system knows when users activate the fire extinguisher and in which direction they’re pointing the nozzle, so it can determine whether they’re dousing the virtual fires.
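The post doesn’t describe the software side, so the following is only a minimal sketch of how that last step *could* work, assuming the system models the spray as a cone projected from the nozzle. It combines the three inputs described above: a nozzle position (which the tracker could supply), a pointing direction (which the nozzle’s gyroscope could supply), and the handle switch state. All names (`ExtinguisherState`, `Fire`, `in_spray_cone`, `update_fire`) and all numbers (15° half-angle, 3 m range, douse rate) are hypothetical and are not taken from the team’s implementation.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class ExtinguisherState:
    """Hypothetical per-frame input from the extinguisher hardware."""
    nozzle_pos: tuple[float, float, float]  # nozzle tip in meters (assumed to come from the tracker pose)
    nozzle_dir: tuple[float, float, float]  # pointing direction (assumed to come from the IMU); any length > 0
    trigger_pressed: bool                    # state of the momentary switch on the handle


@dataclass
class Fire:
    """Hypothetical virtual fire: a center point and a remaining intensity in [0, 1]."""
    center: tuple[float, float, float]
    intensity: float = 1.0


def in_spray_cone(state, fire, half_angle_deg=15.0, max_range_m=3.0):
    """Return True if the fire's center lies inside a cone projected from the nozzle."""
    to_fire = [c - p for c, p in zip(fire.center, state.nozzle_pos)]
    dist = math.hypot(*to_fire)
    dir_len = math.hypot(*state.nozzle_dir)
    if dist == 0.0:
        return True  # nozzle sits exactly at the fire's center
    if dir_len == 0.0 or dist > max_range_m:
        return False  # no usable direction, or fire out of reach
    # Cosine of the angle between the nozzle direction and the line to the fire.
    cos_angle = sum(a * b for a, b in zip(to_fire, state.nozzle_dir)) / (dist * dir_len)
    return cos_angle >= math.cos(math.radians(half_angle_deg))


def update_fire(state, fire, dt_s, douse_rate_per_s=0.5, **cone):
    """Lower the fire's intensity only while the trigger is held and the fire is in the cone."""
    if state.trigger_pressed and fire.intensity > 0.0 and in_spray_cone(state, fire, **cone):
        fire.intensity = max(0.0, fire.intensity - douse_rate_per_s * dt_s)
    return fire.intensity
```

For example, spraying straight at a fire 2 m away for one second (ten 0.1 s frames) halves its intensity with these made-up parameters:

```python
fire = Fire(center=(0.0, 0.0, 2.0))
aimed = ExtinguisherState((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), True)
for _ in range(10):
    update_fire(aimed, fire, dt_s=0.1)
print(round(fire.intensity, 2))  # 0.5
```

Requiring both the switch and the aim check mirrors the two signals the post says the system tracks: whether the extinguisher is active and where the nozzle points.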

More details on the project can be found in the team’s paper.

Image credit: Kang et al.

The post Augmented reality fire drills make training more effective appeared first on Arduino Blog.