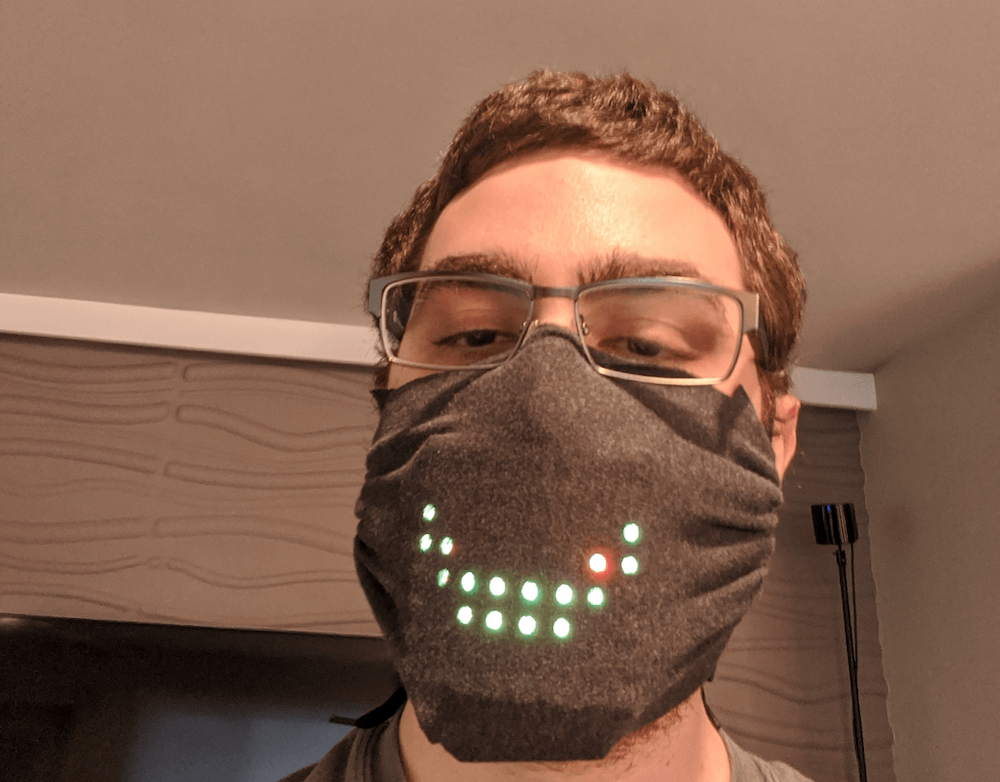

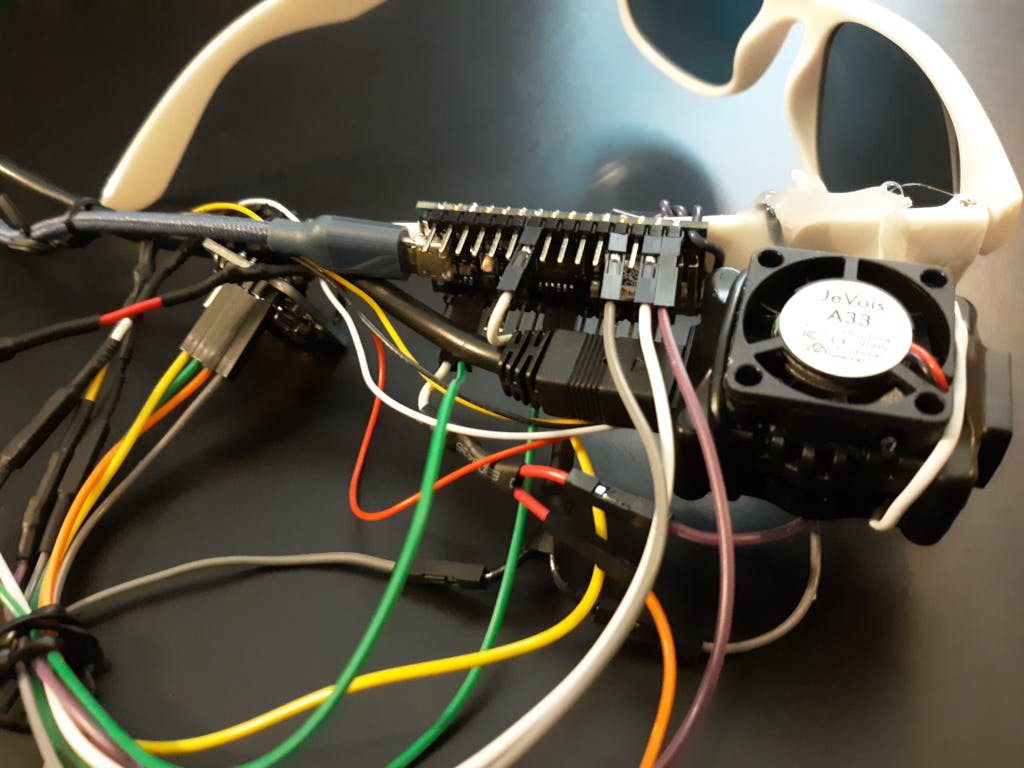

For his school science fair, Mars Kapadia decided to take things up a notch and create his own pair of smart glasses.

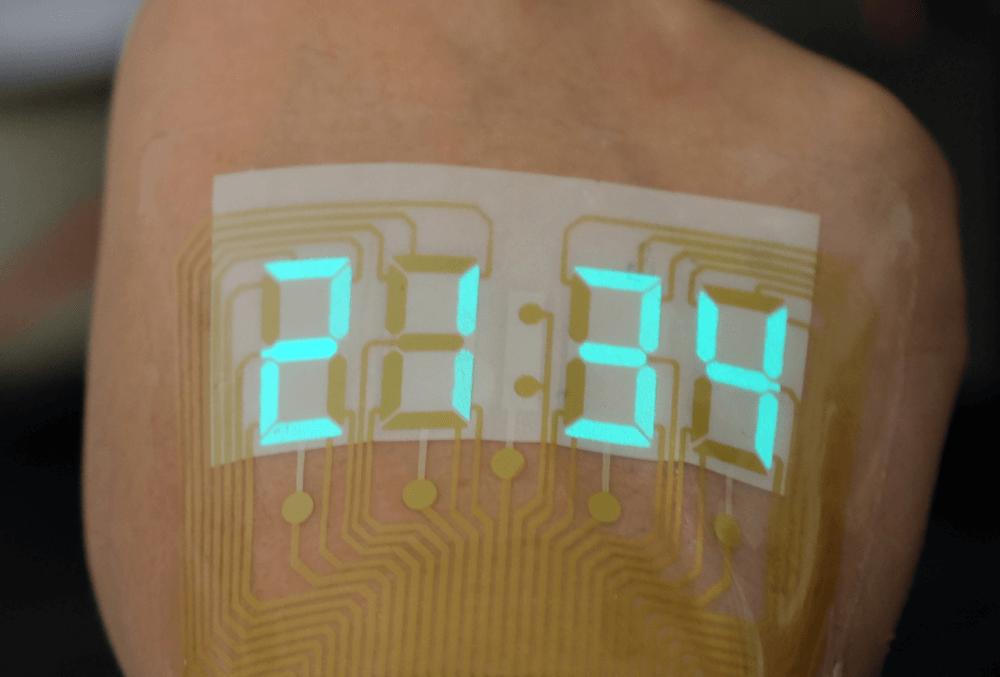

The wearable device, which went on to place at the state competition, uses a transparent OLED display to show information from Retro Watch software running on an Android phone. The glasses are controlled by an Arduino Nano Every, which communicates with the mobile app through an HC-05 Bluetooth module. Power comes from a LiPo battery.

One unusual feature is that the darkened lenses can be flipped down for sun protection outdoors, then flipped up for easier viewing in darker areas. Kapadia demonstrates how his glasses work and discusses the technology used in the video below.