
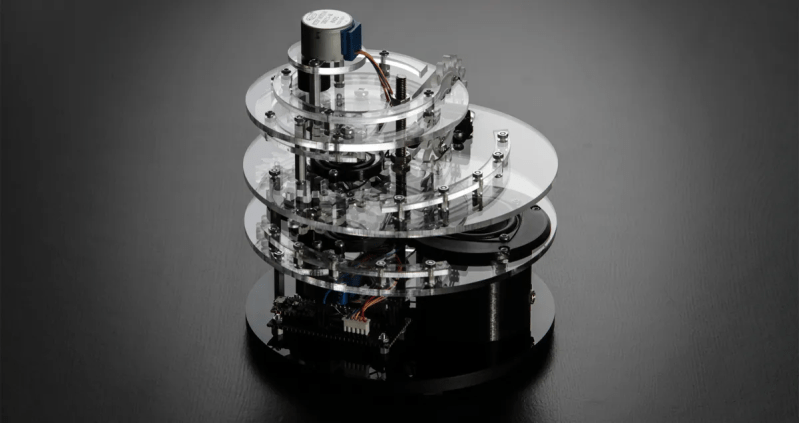

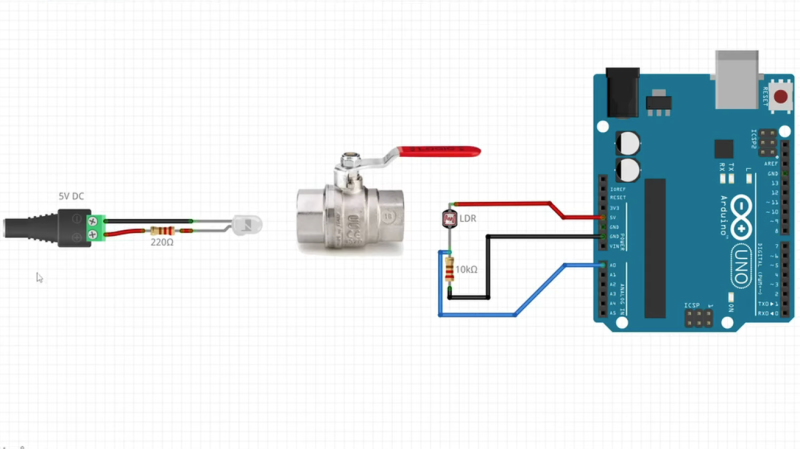

It is one thing to make an artistic steampunk display, but [CapeGeek] went a step further and added an Arduino to bring it to life. The build has plenty of tubes and wires. A pressure gauge dominates the piece, but there are lots of other interesting bits. Check it out in the video below.

From the creator:

The back-story is a fictional factory that cycles through a multistage process. It starts up with lights and sounds in a small tube in one corner, the needle on a big gauge starts rising, then a larger tube at the top lights up in different colors. Finally, the tall glass reactor vessel lights up to start cooking some process. As the sequence progresses, it is accompanied by factory motor sounds and bubbling processes. Finally, a loud glass break noise hints that the process has come to a catastrophic end! Then the sequence starts reversing, with lights sequentially shutting down. The needle jumps around randomly, then decreases. Finally, all lights are off, indicating the factory has shut down.
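
If you want to borrow the idea, the sequence described above maps naturally onto an ordered list of stages that is played forward, interrupted by a failure event, and then played backward. The following is only a rough sketch of that structure in plain C++, not [CapeGeek]'s actual code. The stage names, the `Step` struct, and the `buildShow` function are all hypothetical, and the shutdown is simplified to a strict reversal without the randomly jumping needle.

```cpp
#include <cassert>
#include <string>
#include <vector>

// One entry in the show. Both fields are illustrative placeholders,
// not taken from the original project.
struct Step {
    std::string action;  // what the display does at this step
    std::string sound;   // accompanying sound effect, empty if none
};

// Build the full show: start each stage in order, trigger the failure,
// then stop the stages in reverse order.
std::vector<Step> buildShow() {
    // Hypothetical stage names paraphrased from the creator's description.
    const std::vector<std::string> stages = {
        "small tube", "gauge needle", "top tube", "reactor vessel"};

    std::vector<Step> show;
    for (const auto& s : stages) {
        show.push_back({"start " + s, "factory hum"});
    }

    // The "catastrophic end" that flips the sequence into reverse.
    show.push_back({"failure", "glass break"});

    for (auto it = stages.rbegin(); it != stages.rend(); ++it) {
        show.push_back({"stop " + *it, ""});
    }

    // Sanity check: every stage starts once and stops once, plus the failure.
    assert(show.size() == 2 * stages.size() + 1);
    return show;
}
```

On a real Arduino you would probably swap `std::string` for fixed arrays or enums and step through the list on a timer, but keeping the show as data makes it easy to add a stage or reorder the effects without touching the playback logic.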

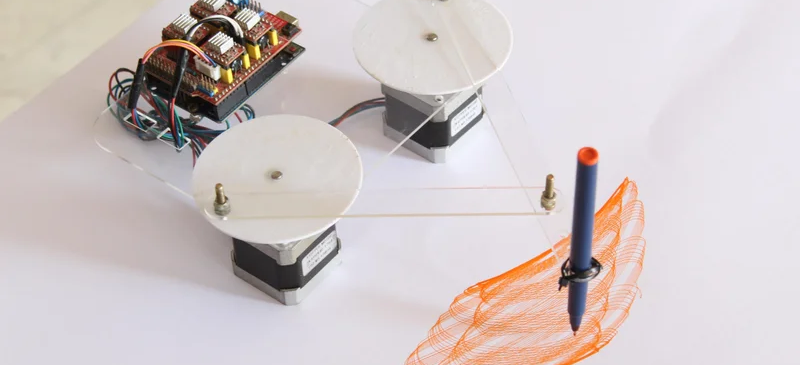

We especially liked the distillation column. We doubt we would duplicate this project exactly, but there are plenty of ideas here to borrow for your own creations.

We always enjoy steampunk computers, but we also like builds with unusual components, such as the distillation column or a chain.