
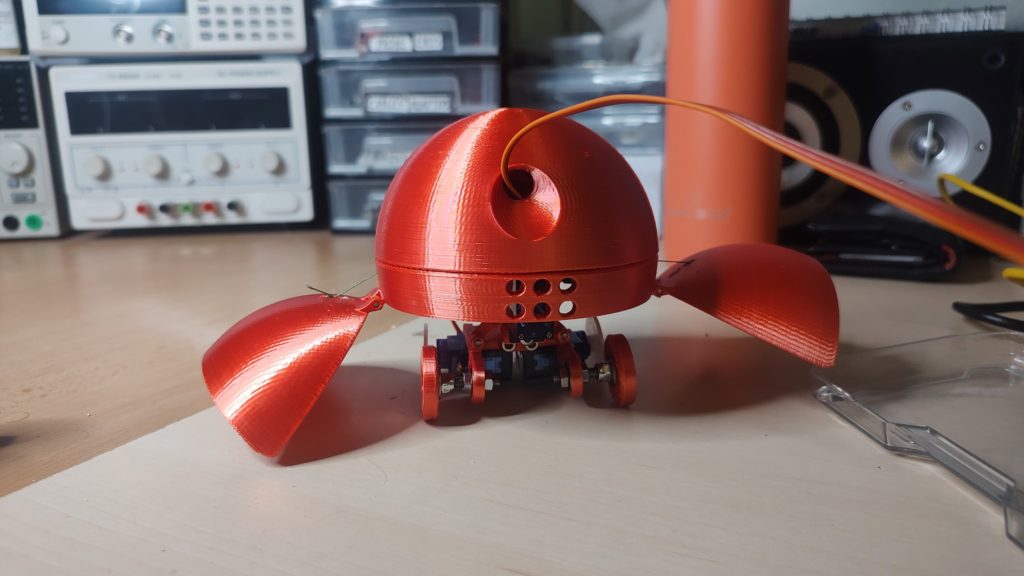

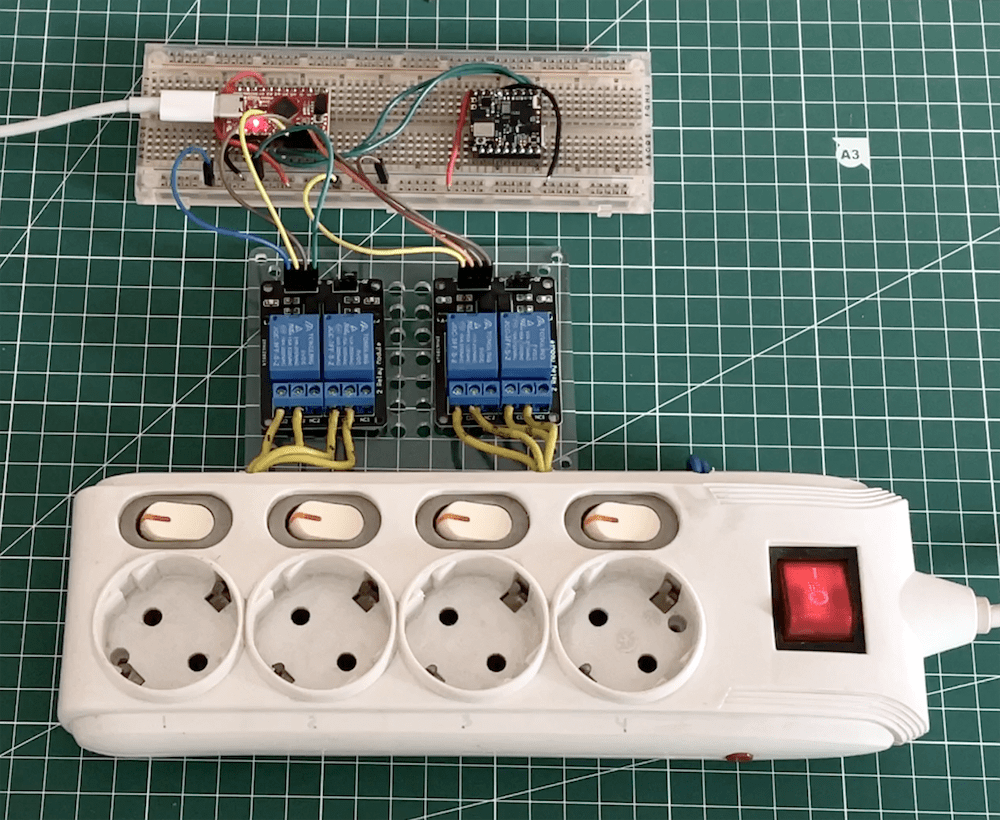

Having constant, reliable access to a working HVAC system is vital to our way of life, as these systems provide a steady supply of fresh, conditioned air. To reduce the downtime and maintenance costs that failures cause, Yunior González and Danelis Guillan have developed a prototype device that uses edge machine learning to predict issues before they occur.

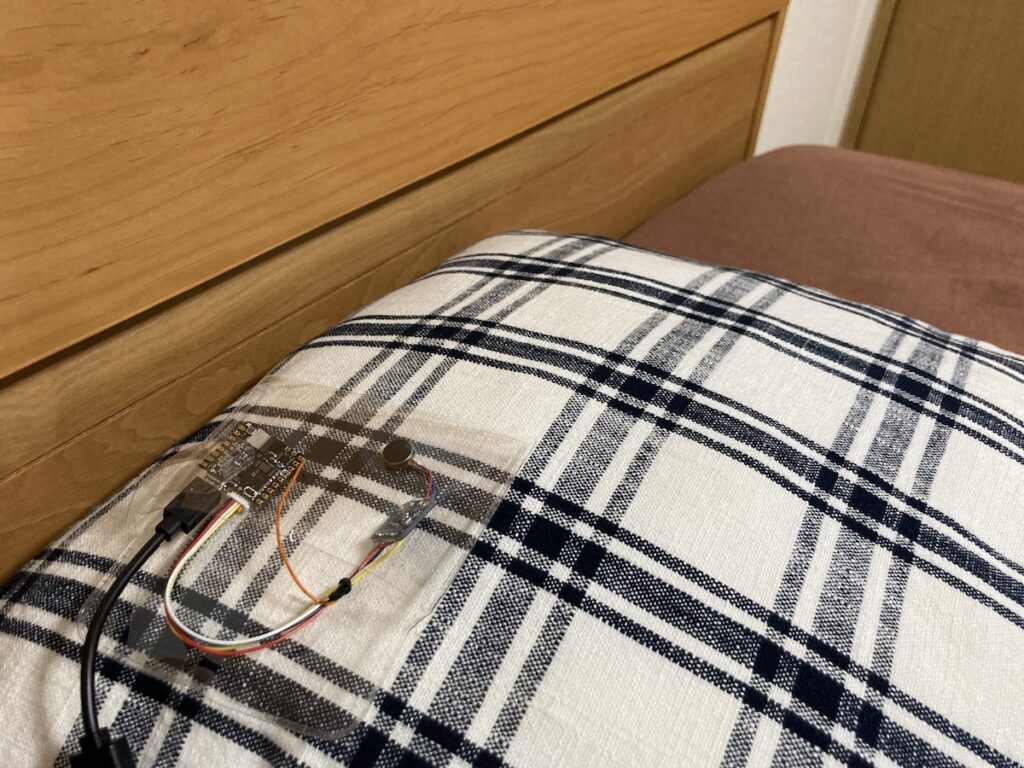

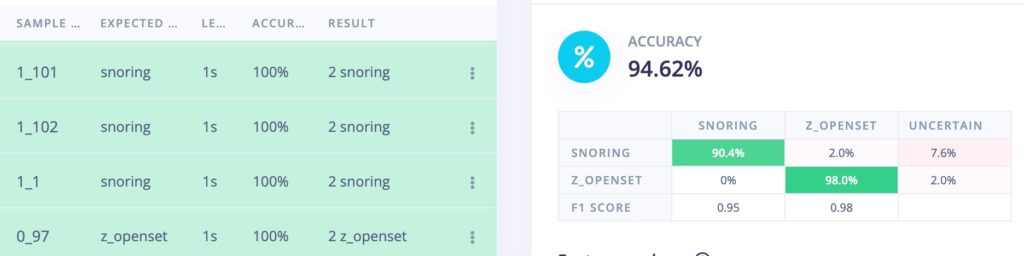

The duo chose a Nicla Sense ME for its onboard accelerometer. After collecting many readings from each of its three axes at a 10 Hz sampling rate, they imported the data into Edge Impulse to create the model. This time, rather than using a classifier, they used a K-means clustering algorithm. K-means groups readings from normal operation into clusters, so a new reading that lands far from every cluster, such as one from a motor spinning erratically, stands out against the steady baseline and can be flagged as an anomaly.
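The write-up doesn't publish its feature set or code, so what follows is only a minimal Python sketch of how K-means anomaly detection works in general, not the authors' Edge Impulse model. It assumes 2-second windows (20 samples at 10 Hz), summarizes each window by per-axis RMS (a deliberately simple stand-in, since the project's actual features are unknown), fits K-means on normal windows only, and scores a new window by its distance to the nearest centroid. The helper names, the simulated data, k = 3, and the 1.5× threshold margin are all hypothetical; Edge Impulse's own anomaly block may compute its score differently.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]
Sample = Tuple[float, float, float]  # one (x, y, z) accelerometer reading, in g


def window_features(samples: Sequence[Sample]) -> Vector:
    """Summarize one window by the RMS of each axis (hypothetical feature choice)."""
    n = len(samples)
    return [math.sqrt(sum(s[axis] ** 2 for s in samples) / n) for axis in range(3)]


def dist(a: Vector, b: Vector) -> float:
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def kmeans(points: List[Vector], k: int, iters: int = 50, seed: int = 0) -> List[Vector]:
    """Plain Lloyd's-algorithm K-means; returns the k centroids."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]  # random initial centroids
    for _ in range(iters):
        clusters: List[List[Vector]] = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its members; keep it if the cluster is empty.
        new_centroids = [
            [sum(axis) / len(members) for axis in zip(*members)] if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
        if new_centroids == centroids:  # centroids stopped moving
            break
        centroids = new_centroids
    return centroids


def anomaly_score(point: Vector, centroids: List[Vector]) -> float:
    """Distance to the nearest 'normal' centroid; larger means more unusual."""
    return min(dist(point, c) for c in centroids)


def simulate_window(rng: random.Random, amplitude: float, n: int = 20) -> List[Sample]:
    """Hypothetical data: n samples at 10 Hz (2 s); z carries ~1 g of gravity."""
    return [
        (rng.gauss(0, amplitude), rng.gauss(0, amplitude), 1.0 + rng.gauss(0, amplitude))
        for _ in range(n)
    ]


rng = random.Random(42)
# Train only on windows from normal (low-vibration) operation.
train = [window_features(simulate_window(rng, 0.05)) for _ in range(200)]
centroids = kmeans(train, k=3)
# Hypothetical threshold: 1.5x the worst score seen on normal training data.
threshold = 1.5 * max(anomaly_score(p, centroids) for p in train)

normal = window_features(simulate_window(rng, 0.05))
erratic = window_features(simulate_window(rng, 0.5))  # e.g. a motor spinning erratically
print("normal flagged:", anomaly_score(normal, centroids) > threshold)
print("erratic flagged:", anomaly_score(erratic, centroids) > threshold)
```

One appeal of this approach is that the model only ever sees normal data, so it needs no recorded examples of an HVAC unit actually failing.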

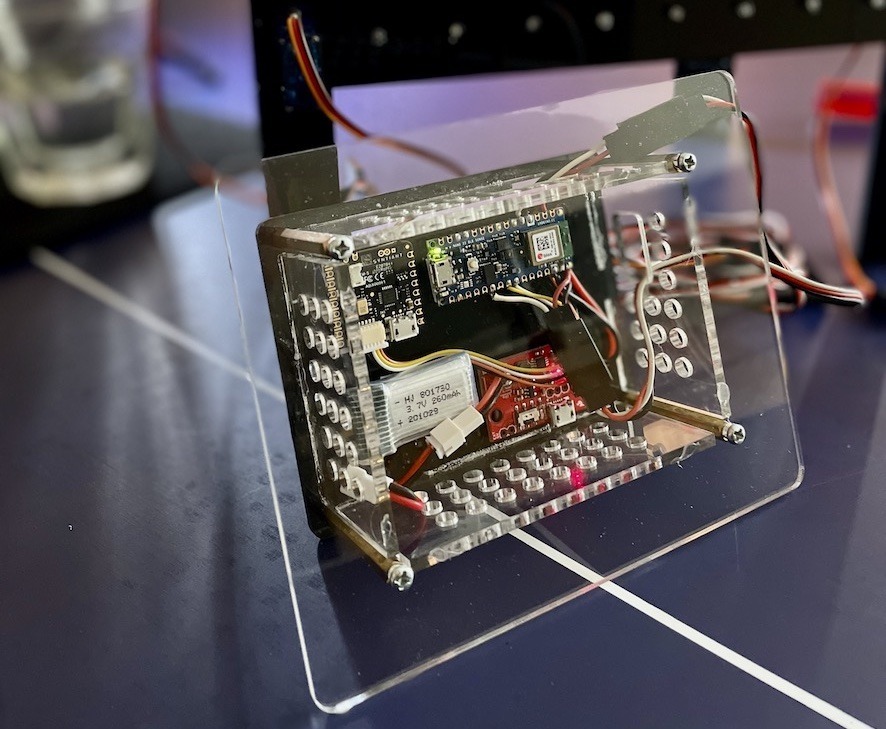

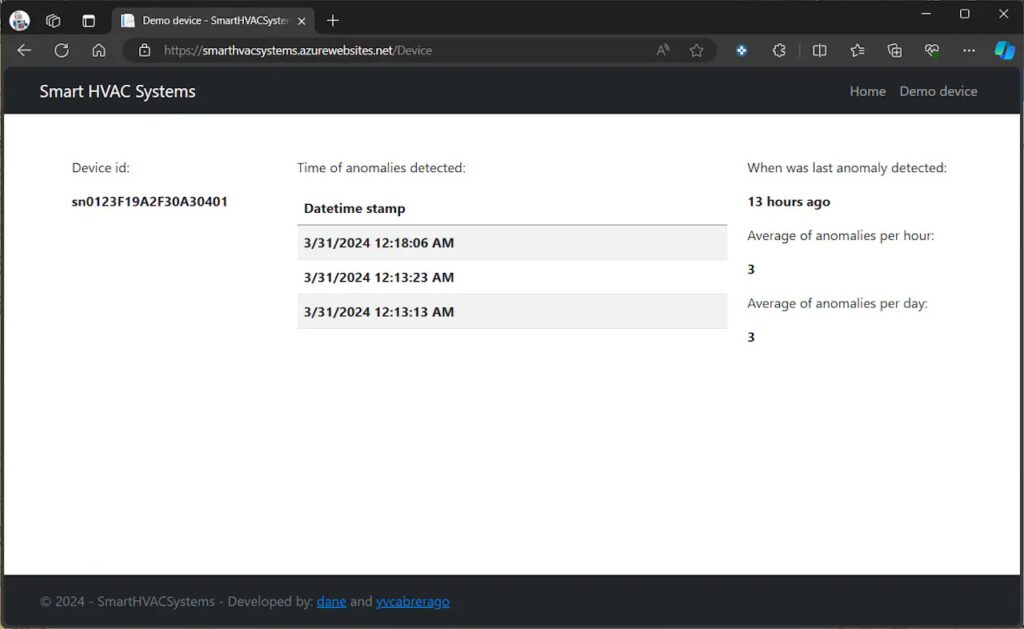

Once the Nicla Sense ME has detected an anomaly, it needs a way to send this information elsewhere and generate an alert. González and Guillan's setup accomplishes this by connecting a Microchip AVR-IoT Cellular Mini board, along with a screen, to the Nicla Sense ME. Upon receiving a digital signal from the Nicla Sense ME, the AVR-IoT Cellular Mini logs a failure in an Azure Cosmos DB instance, where it can later be viewed in a web app.
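The post doesn't say how the AVR-IoT Cellular Mini firmware reacts to that signal, so here is a hypothetical Python sketch of the relay logic only. It watches polled samples of the digital line for LOW-to-HIGH transitions, so that a signal held high produces a single log entry, and builds one JSON document per event. The function names, field names, device ID, and the edge-triggered behavior are all assumptions; the real firmware would run in C/C++ on the AVR microcontroller and presumably send the document to Azure over its cellular connection, which this sketch does not attempt. The one Cosmos DB-specific detail is that every stored item needs a unique string `id`.

```python
import json
from datetime import datetime, timezone
from typing import Iterable, List, Optional


def rising_edges(levels: Iterable[int]) -> List[int]:
    """Indices where a sampled digital line goes from LOW (0) to HIGH (1)."""
    edges = []
    previous = 0  # assume the line starts LOW
    for i, level in enumerate(levels):
        if level == 1 and previous == 0:
            edges.append(i)
        previous = level
    return edges


def failure_record(device_id: str, event_index: int,
                   timestamp: Optional[datetime] = None) -> str:
    """Build one failure document as JSON (hypothetical schema).

    Cosmos DB items require a unique string 'id'; the other fields are illustrative.
    """
    ts = timestamp or datetime.now(timezone.utc)
    doc = {
        "id": f"{device_id}-{event_index}",
        "deviceId": device_id,
        "event": "anomaly_detected",
        "timestamp": ts.isoformat(),
    }
    return json.dumps(doc)


# Simulated polls of the Nicla Sense ME's output line: two separate alerts.
line = [0, 0, 1, 1, 1, 0, 0, 1, 0]
events = rising_edges(line)
fixed_time = datetime(2024, 1, 1, tzinfo=timezone.utc)
records = [failure_record("hvac-unit-1", i, fixed_time) for i in events]
for r in records:
    print(r)
```

Edge-triggering is a design choice in this sketch: if the firmware instead logged on every poll while the line stayed HIGH, one anomaly lasting several polls would create several database entries.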

You can read more about this preventative maintenance project in the pair's write-up on Hackster.io.

The post Detecting HVAC failures early with an Arduino Nicla Sense ME and edge ML appeared first on Arduino Blog.